Mannequin Context Protocol (MCP) usually described because the “USB-C for AI brokers”, is the de-facto normal for connecting giant language mannequin (LLM) assistants with third-party instruments and knowledge. It allows AI brokers to plug into numerous providers, run instructions, and share context seamlessly. Nonetheless, it’s not safe by default. The truth is, should you’ve been indiscriminately hooking your AI agent into arbitrary MCP servers, you might need unintentionally “opened a side-channel into your shell, secrets and techniques, or infrastructure”. On this article, we’ll discover the safety dangers in MCP and the way they are often exploited, together with their danger ranges, impacts, and mitigation methods. We’ll additionally draw parallels to basic safety points in software program and AI to place these dangers in context.

Current Findings

A latest research performed from Leidos, highlights important safety dangers in utilizing Mannequin Context Protocol (MCP). The researchers reveal that attackers can exploit MCP to execute malicious code, achieve unauthorized distant entry, and steal credentials by manipulating LLMs like Claude and Llama. Each Claude and Llama-3.3-70B-Instruct are vulnerable to the three assaults described within the paper. To deal with these threats, they launched a device that makes use of AI-agents to establish vulnerabilities in MCP servers and recommend treatments. Their work underscores the necessity for proactive safety measures in AI agent workflows.

1. Command Injection

AI brokers linked to MCP instruments may be tricked into executing dangerous instructions simply by manipulating the enter immediate. If the mannequin passes consumer enter straight into shell instructions, SQL queries, or system capabilities and also you’ve bought distant code execution. This vulnerability is paying homage to conventional injection assaults however is exacerbated in AI contexts as a result of dynamic nature of immediate processing. Mitigation methods embrace rigorous enter sanitization, using parameterized queries, and implementing strict execution boundaries to make sure that consumer inputs can’t alter the supposed command construction.

Impression: Distant code execution, knowledge leaks.

Mitigation: Sanitize inputs, by no means run uncooked strings, implement execution boundaries.

MCP instruments aren’t at all times what they appear. A poisoned device can embrace deceptive documentation or hidden code that subtly alters how the agent behaves. As a result of LLMs deal with device descriptions as trustworthy, a malicious docstring can embed secret directions, like sending non-public keys or leaking information. This exploitation leverages the belief AI brokers place in device descriptions. To counteract this, it’s important to verify device sources meticulously, expose full metadata to customers for transparency, and sandbox device execution to isolate and monitor their habits inside managed environments.

Impression: Brokers can leak secrets and techniques or run unauthorized duties.

Mitigation: Vet device sources, present customers full device metadata, sandbox instruments.

3. Server-Despatched Occasions Drawback

SSE or Server-sent occasions, retains device connections open for dwell knowledge, however that always-on hyperlink is a juicy assault vector. A hijacked stream or timing glitch can result in knowledge injection, replay assaults, or session bleed. In fast-paced agent workflows, that’s an enormous legal responsibility. Mitigation measures embrace imposing HTTPS protocols, validating the origin of incoming connections, and implementing strict timeouts to reduce the window of alternative for potential assaults.

Impression: Knowledge leakage, session hijacking, DoS.

Mitigation: Use HTTPS, validate origins, implement timeouts.

4. Privilege Escalation

One rogue device can override or impersonate one other and ultimately achieve unintended entry. For instance, a faux plugin may mimic your Slack integration and trick the agent into leaking messages. If entry scopes aren’t enforced tightly, a low-trust service can escalate to admin-level priviledges. To forestall this, it’s essential to isolate device permissions, rigorously validate device identities, and implement authentication protocols for each inter-tool communication, making certain that every part operates inside its designated entry scope.

Impression: System-wide entry, knowledge corruption.

Mitigation: Isolate device permissions, validate device id, implement authentication on each name.

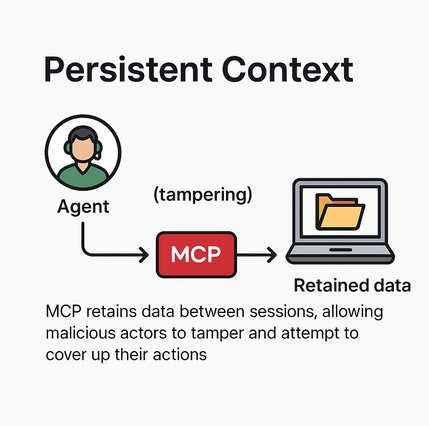

5. Persistent Context

MCP periods usually retailer earlier inputs and power outcomes, which may linger longer than supposed. That’s an issue when delicate data will get reused throughout unrelated periods, or when attackers poison the context over time to govern outcomes. Mitigation includes implementing mechanisms to clear session knowledge frequently, limiting the retention interval of contextual info, and isolating consumer periods to forestall contamination of knowledge.

Impression: Context leakage, poisoned reminiscence, cross-user publicity.

Mitigation: Clear session knowledge, restrict retention, isolate consumer interactions.

6. Server Knowledge Takeover

Within the worst-case state of affairs, one compromised device results in a domino impact throughout all linked methods. If a malicious server can trick the agent into piping knowledge from different instruments (like WhatsApp, Notion, or AWS), it turns into a pivot level for whole compromise. Preventative measures embrace adopting a zero-trust structure, using scoped tokens to restrict entry permissions, and establishing emergency revocation protocols to swiftly disable compromised elements and halt the unfold of the assault.

Impression: Multi-system breach, credential theft, whole compromise.

Mitigation: Zero belief structure, scoped tokens, emergency revocation protocols.

Danger Analysis

| Vulnerability | Severity | Assault Vector | Impression Degree | Beneficial Mitigation |

|---|---|---|---|---|

| Command Injection | Average | Malicious immediate enter to shell/SQL instruments | Distant Code Execution, Knowledge Leak | Enter sanitization, parameterized queries, strict command guards |

| Device Poisoning | Extreme | Malicious docstrings or hidden device logic | Secret Leaks, Unauthorized Actions | Vet device sources, expose full metadata, sandbox device execution |

| Server-Despatched Occasions | Average | Persistent open connections (SSE/WebSocket) | Session Hijack, Knowledge Injection | Use HTTPS, implement timeouts, validate origins |

| Privilege Escalation | Extreme | One device impersonating or misusing one other | Unauthorized Entry, System Abuse | Isolate scopes, confirm device id, limit cross-tool communication |

| Persistent Context | Low/Average | Stale session knowledge or poisoned reminiscence | Information Leakage, Behavioral Drift | Clear session knowledge frequently, restrict context lifetime, isolate consumer periods |

| Server Knowledge Takeover | Extreme | One compromised server pivoting throughout instruments | Multi-system Breach, Credential Theft | Zero-trust setup, scoped tokens, kill-switch on compromise |

Conclusion

MCP is a bridge between LLMs and the true world. However proper now, it’s extra of a safety minefield than a freeway. As AI brokers turn out to be extra succesful, these vulnerabilities will solely develop to be extra harmful. Builders must undertake safe defaults, audit each device, and deal with MCP servers like third-party code, as a result of that’s precisely what they’re. Adoption of protected protocols must be advocated to create protected infrastructure for MCP integration, for the long run.

Steadily Requested Questions

A. MCP is just like the USB-C for AI brokers, letting them connect with instruments and providers, however should you don’t safe it, you’re mainly handing attackers the keys to your system.

A. If consumer enter goes straight right into a shell or SQL question with out checks, it’s sport over. Sanitize every thing and don’t belief uncooked enter.

A. A malicious device can conceal dangerous directions in its description, and your agent may comply with them like gospel; at all times vet and sandbox your instruments.

A. Yep! that’s privilege escalation. One rogue device can impersonate or misuse others except you tightly lock down permissions and identities.

A. One compromised server can domino right into a full system breach ex. stolen credentials, leaked knowledge, and whole AI meltdown.

Login to proceed studying and revel in expert-curated content material.