In many parts of the world, including major technology hubs in the U.S., there’s a yearslong wait for AI factories to come online, pending the buildout of new energy infrastructure to power them.

Emerald AI, a startup based in Washington, D.C., is developing an AI solution that could enable the next generation of data centers to come online sooner by tapping existing energy resources in a more flexible and strategic way.

“Traditionally, the power grid has treated data centers as inflexible: energy system operators assume that a 500-megawatt AI factory will always require access to that full amount of power,” said Varun Sivaram, founder and CEO of Emerald AI. “But in moments of need, when demands on the grid peak and supply is short, the workloads that drive AI factory energy use can now be flexible.”

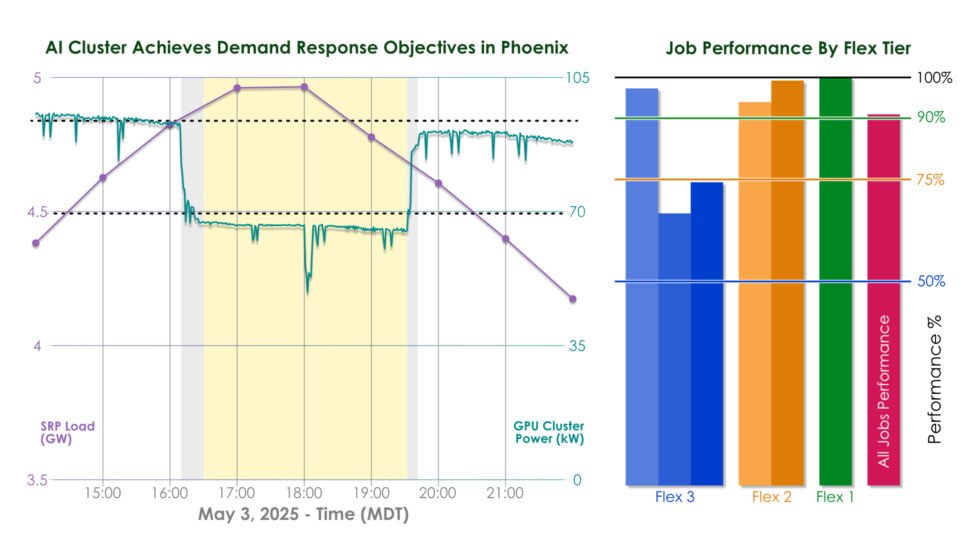

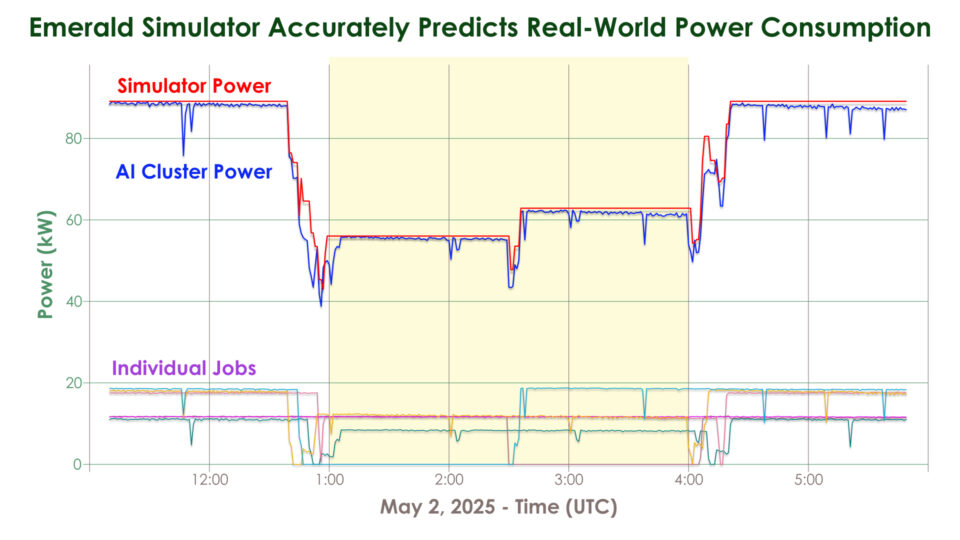

That flexibility is enabled by the startup’s Emerald Conductor platform, an AI-powered system that acts as a smart mediator between the grid and a data center. In a recent field test in Phoenix, Arizona, the company and its partners demonstrated that its software can reduce the power consumption of AI workloads running on a cluster of 256 NVIDIA GPUs by 25% over three hours during a grid stress event while preserving compute service quality.

Emerald AI achieved this by orchestrating the host of different workloads that AI factories run. Some jobs can be paused or slowed, like the training or fine-tuning of a large language model for academic research. Others, like inference queries for an AI service used by thousands or even millions of people, can’t be rescheduled, but could be redirected to another data center where the local power grid is less stressed.

Emerald Conductor coordinates these AI workloads across a network of data centers to meet power grid demands, ensuring full performance of time-sensitive workloads while dynamically reducing the throughput of flexible workloads within acceptable limits.
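The idea of protecting time-sensitive work while throttling flexible work can be illustrated with a toy allocation. This is only a sketch under stated assumptions, not Emerald Conductor’s actual method: the `Job` class, the `plan_reduction` function, the kilowatt figures and the per-job floors are all hypothetical. It also treats power as the quantity being throttled directly, which simplifies the throughput reduction described above.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    power_kw: float      # current draw (hypothetical figure)
    min_fraction: float  # lowest acceptable share of current power;
                         # 1.0 marks a time-sensitive job that must not be slowed


def plan_reduction(jobs, target_fraction):
    """Return {job name: new power in kW} that cuts total draw by target_fraction.

    Time-sensitive jobs keep full power. Flexible jobs share the cut in
    proportion to the headroom each can give up, so none drops below its floor.
    Raises ValueError when the floors make the target unreachable.
    """
    total = sum(j.power_kw for j in jobs)
    needed = total * target_fraction
    headroom = {j.name: j.power_kw * (1.0 - j.min_fraction) for j in jobs}
    available = sum(headroom.values())
    if needed > available + 1e-9:
        raise ValueError("target exceeds available flexibility")
    share = needed / available if available else 0.0
    return {j.name: j.power_kw - headroom[j.name] * share for j in jobs}


jobs = [
    Job("inference", 40.0, 1.0),   # cannot be slowed
    Job("training", 40.0, 0.5),    # may drop to half power
    Job("fine-tune", 20.0, 0.5),
]
plan = plan_reduction(jobs, 0.25)  # the 25% reduction used in the Phoenix test
```

In this example the inference job stays at 40 kW while the two flexible jobs absorb the full 25 kW cut between them.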

Beyond helping AI factories come online using existing power systems, this ability to modulate power usage could help cities avoid rolling blackouts, protect communities from rising utility rates and make it easier for the grid to integrate clean energy.

“Renewable energy, which is intermittent and variable, is easier to add to a grid if that grid has lots of shock absorbers that can shift with changes in power supply,” said Ayse Coskun, Emerald AI’s chief scientist and a professor at Boston University. “Data centers can become some of those shock absorbers.”

A member of the NVIDIA Inception program for startups and an NVentures portfolio company, Emerald AI today announced more than $24 million in seed funding. Its Phoenix demonstration, part of EPRI’s DCFlex data center flexibility initiative, was executed in collaboration with NVIDIA, Oracle Cloud Infrastructure (OCI) and the regional power utility Salt River Project (SRP).

“The Phoenix technology trial validates the vast potential of an essential element in data center flexibility,” said Anuja Ratnayake, who leads EPRI’s DCFlex Consortium.

EPRI is also leading the Open Power AI Consortium, a group of energy companies, researchers and technology companies (including NVIDIA) working on AI applications for the energy sector.

Using the Grid to Its Full Potential

Electric grid capacity is typically underused except during peak events like hot summer days or cold winter storms, when there’s high power demand for cooling and heating. That means, in many cases, there’s room on the existing grid for new data centers, as long as they can temporarily dial down energy usage during periods of peak demand.

A recent Duke University study estimates that if new AI data centers could flex their electricity consumption by just 25% for two hours at a time, less than 200 hours a year, they could unlock 100 gigawatts of new capacity to connect data centers, equivalent to over $2 trillion in data center investment.
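To put those figures in proportion, a back-of-the-envelope calculation shows how little annual energy such flexing would give up. This is an illustrative sketch, not a result from the study: it assumes a flat baseline load all year, treats 200 hours as an upper bound, and the function name `curtailed_energy_share` is hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def curtailed_energy_share(reduction, curtailed_hours):
    """Share of annual energy forgone, assuming a flat baseline load."""
    return reduction * curtailed_hours / HOURS_PER_YEAR


share = curtailed_energy_share(0.25, 200)
print(f"{share:.2%}")  # prints 0.57%
```

Under these assumptions, a 25% cut for 200 hours amounts to well under 1% of a data center’s yearly energy use.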

Putting AI Factory Flexibility to the Test

Emerald AI’s recent trial was conducted in the Oracle Cloud Phoenix Region on NVIDIA GPUs spread across a multi-rack cluster managed through Databricks MosaicML.

“Rapid delivery of high-performance compute to AI customers is critical but is constrained by grid power availability,” said Pradeep Vincent, chief technical architect and senior vice president of Oracle Cloud Infrastructure, which supplied cluster power telemetry for the trial. “Compute infrastructure that is responsive to real-time grid conditions while meeting the performance demands unlocks a new model for scaling AI: faster, greener and more grid-aware.”

Jonathan Frankle, chief AI scientist at Databricks, guided the trial’s selection of AI workloads and their flexibility thresholds.

“There’s a certain level of latent flexibility in how AI workloads are typically run,” Frankle said. “Often, a small percentage of jobs are truly non-preemptible, whereas many jobs such as training, batch inference or fine-tuning have different priority levels depending on the user.”

Because Arizona is among the top states for data center growth, SRP set challenging flexibility targets for the AI compute cluster (a 25% power consumption reduction compared with baseline load) in an effort to demonstrate how new data centers can provide meaningful relief to Phoenix’s power grid constraints.

“This test was an opportunity to completely reimagine AI data centers as helpful resources to help us operate the power grid more effectively and reliably,” said David Rousseau, president of SRP.

On May 3, a hot day in Phoenix with high air-conditioning demand, SRP’s system experienced peak demand at 6 p.m. During the test, the data center cluster reduced consumption gradually with a 15-minute ramp down, maintained the 25% power reduction over three hours, then ramped back up without exceeding its original baseline consumption.
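The shape of that event can be sketched as a simple piecewise power cap. This is a minimal illustration, not the trial’s control logic: the function `power_cap` is hypothetical, the linear ramps are an assumption, the three-hour hold is assumed to begin once the ramp down ends, and the 15-minute ramp up is a guess, since the source gives a duration only for the ramp down.

```python
def power_cap(t_min, reduction=0.25, ramp_down=15.0, hold=180.0, ramp_up=15.0):
    """Cap as a fraction of baseline power, t_min minutes after the event starts.

    ramp_up is an assumed value; only the ramp-down length is given in the text.
    """
    floor = 1.0 - reduction
    if t_min <= 0:
        return 1.0                                   # before the event
    if t_min < ramp_down:
        return 1.0 - reduction * t_min / ramp_down   # linear ramp down
    if t_min <= ramp_down + hold:
        return floor                                 # hold at reduced power
    end = ramp_down + hold + ramp_up
    if t_min < end:
        # linear ramp back up, never rising above the baseline
        return floor + reduction * (t_min - ramp_down - hold) / ramp_up
    return 1.0                                       # back at baseline


# Sample the cap every 15 minutes across the event window.
profile = [(t, power_cap(t)) for t in range(0, 226, 15)]
```

Because the cap never rises above 1.0, the profile reflects the reported behavior of returning to baseline without overshooting it.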

AI factory users can label their workloads to guide Emerald’s software on which jobs can be slowed, paused or rescheduled. Alternatively, Emerald’s AI agents can make these predictions automatically.

Orchestration decisions were guided by the Emerald Simulator, which accurately models system behavior to optimize trade-offs between energy usage and AI performance. Historical grid demand from data provider Amperon confirmed that the AI cluster performed correctly during the grid’s peak period.

Forging an Energy-Resilient Future

The International Energy Agency projects that electricity demand from data centers globally could more than double by 2030. In light of the anticipated demand on the grid, the state of Texas passed a law that requires data centers to ramp down consumption or disconnect from the grid at utilities’ requests during load shed events.

“In such situations, if data centers are able to dynamically reduce their energy consumption, they might be able to avoid getting kicked off the power supply completely,” Sivaram said.

Looking ahead, Emerald AI is expanding its technology trials in Arizona and beyond, and it plans to continue working with NVIDIA to test its technology on AI factories.

“We can make data centers controllable while assuring acceptable AI performance,” Sivaram said. “AI factories can flex when the grid is tight, and sprint when users need them to.”

Learn more about NVIDIA Inception and explore AI platforms designed for power and utilities.