Across the globe, AI factories are rising: massive new data centers built not to serve up web pages or email, but to train and deploy intelligence itself. Internet giants have invested billions in cloud-scale AI infrastructure for their customers. Companies are racing to build AI foundries that will spawn the next generation of products and services. Governments are investing too, eager to harness AI for personalized medicine and language services tailored to national populations.

Welcome to the age of AI factories, where the rules are being rewritten and the wiring doesn’t look anything like the old internet. These aren’t typical hyperscale data centers. They’re something else entirely. Think of them as high-performance engines stitched together from tens to hundreds of thousands of GPUs: not just built, but orchestrated, operated and activated as a single unit. And that orchestration? It’s the whole game.

This giant data center has become the new unit of computing, and the way these GPUs are connected defines what this unit of computing can do. One network architecture won’t cut it. What’s needed is a layered design with bleeding-edge technologies, like co-packaged optics that once seemed like science fiction.

The complexity isn’t a bug; it’s the defining feature. AI infrastructure is diverging fast from everything that came before it, and without rethinking how the pipes connect, scale breaks down. Get the network layers wrong, and the whole machine grinds to a halt. Get them right, and gain extraordinary performance.

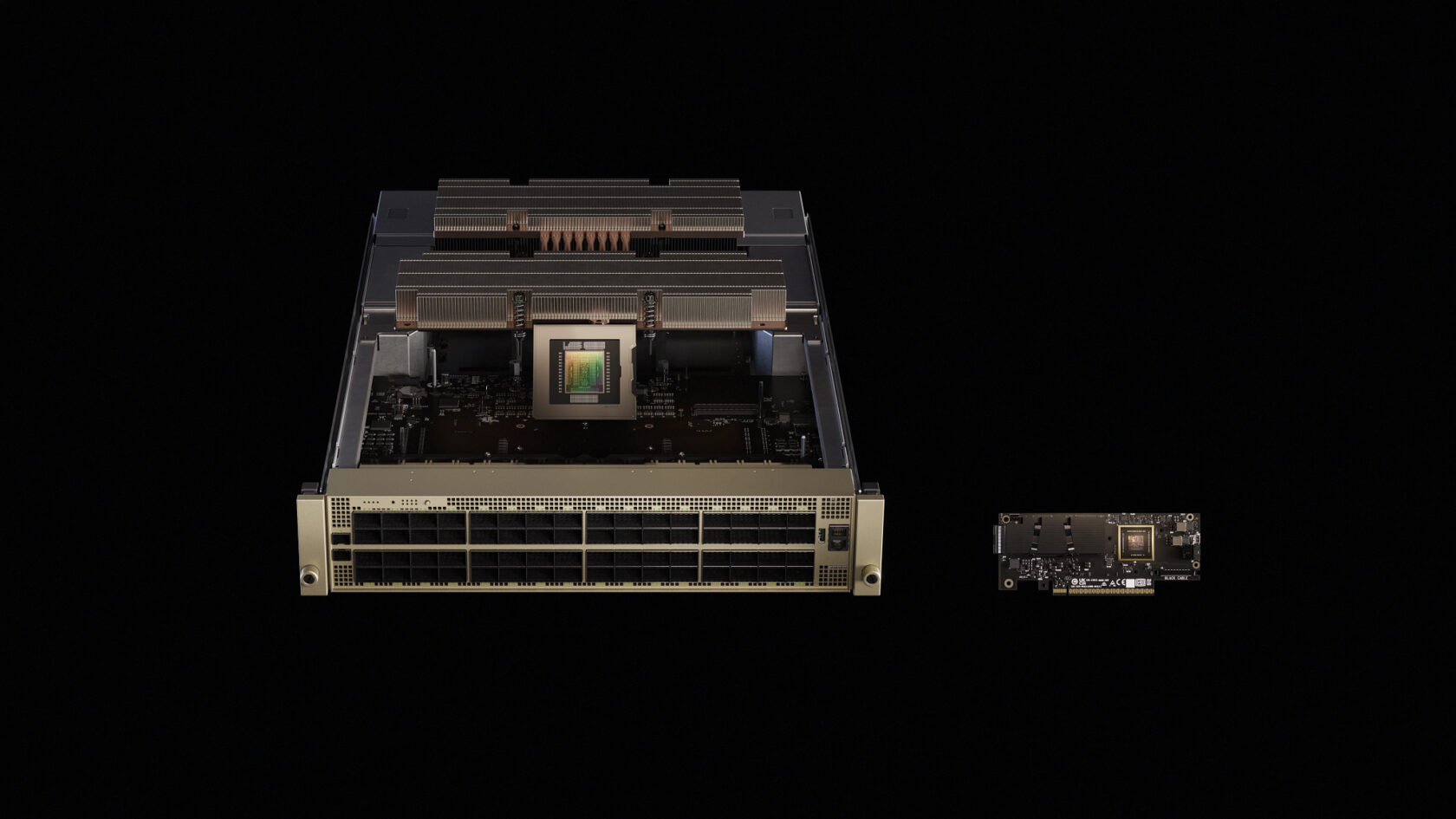

With that shift comes weight, literally. A decade ago, chips were built to be sleek and lightweight. Now, the cutting edge looks like the multi-hundred-pound copper spine of a server rack. Liquid-cooled manifolds. Custom busbars. Copper spines. AI now demands massive, industrial-scale hardware. And the deeper the models go, the more these machines scale up, and out.

The NVIDIA NVLink spine, for example, is built from over 5,000 coaxial cables, tightly wound and precisely routed. It moves more data per second than the entire internet. That’s 130 TB/s of GPU-to-GPU bandwidth, fully meshed.

This isn’t just fast. It’s foundational. The AI super-highway now lives inside the rack.

The Data Center Is the Computer

Training the modern large language models (LLMs) behind AI isn’t about burning cycles on a single machine. It’s about orchestrating the work of tens or even hundreds of thousands of GPUs that are the heavy lifters of AI computation.

These systems rely on distributed computing, splitting massive calculations across nodes (individual servers), where each node handles a slice of the workload. In training, these slices, typically massive matrices of numbers, need to be regularly merged and updated. That merging occurs through collective operations, such as “all-reduce” (which combines data from all nodes and redistributes the result) and “all-to-all” (where each node exchanges data with every other node).
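To make the two collectives concrete, here is a minimal single-process sketch of what each one computes. It models only the semantics, with plain Python lists standing in for nodes; the function names `all_reduce` and `all_to_all` are illustrative and are not the API of NCCL or any other library, and no real network traffic is involved.

```python
from typing import List


def all_reduce(node_data: List[List[float]]) -> List[List[float]]:
    """Sum the vectors held by all nodes, then hand every node a copy of the sum."""
    total = [sum(values) for values in zip(*node_data)]
    return [list(total) for _ in node_data]


def all_to_all(node_data: List[List[str]]) -> List[List[str]]:
    """Node i sends its j-th chunk to node j: a transpose of the chunk grid."""
    n = len(node_data)
    return [[node_data[src][dst] for src in range(n)] for dst in range(n)]


# Three hypothetical nodes, each holding a two-element gradient slice.
gradients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
reduced = all_reduce(gradients)

# Three hypothetical nodes, each holding one chunk destined for every node.
chunks = [["a0", "a1", "a2"], ["b0", "b1", "b2"], ["c0", "c1", "c2"]]
exchanged = all_to_all(chunks)

print(reduced[0])    # every node ends up with the same summed vector
print(exchanged[0])  # node 0 ends up with chunk 0 from every sender
```

In a real cluster each inner list lives on a different server, so every one of these element movements crosses the network.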

These processes are sensitive to the speed and responsiveness of the network, what engineers call latency (delay) and bandwidth (data capacity), and a shortfall in either causes stalls in training.
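One way to see how latency and bandwidth both feed into training time is the textbook latency-bandwidth cost model for a ring all-reduce. This model is an assumption brought in for illustration, not something the article describes, and the node count, message size, latency and link speed below are hypothetical values. In the ring algorithm, each of N nodes takes 2(N-1) steps, and every step pays one latency plus the transfer time of a 1/N-sized chunk.

```python
def ring_all_reduce_seconds(
    num_nodes: int,
    message_bytes: float,
    latency_s: float,
    bandwidth_bytes_per_s: float,
) -> float:
    """Estimate ring all-reduce time with the simple latency-bandwidth model."""
    steps = 2 * (num_nodes - 1)               # reduce-scatter + all-gather phases
    chunk_bytes = message_bytes / num_nodes   # each step moves one chunk
    return steps * (latency_s + chunk_bytes / bandwidth_bytes_per_s)


# Hypothetical setup: 8 nodes, 1 GB of gradients, 800 Gb/s links (100e9 bytes/s).
baseline = ring_all_reduce_seconds(8, 1e9, 5e-6, 100e9)

# Same setup with 10x the per-step latency, and with half the bandwidth.
higher_latency = ring_all_reduce_seconds(8, 1e9, 50e-6, 100e9)
half_bandwidth = ring_all_reduce_seconds(8, 1e9, 5e-6, 50e9)

print(f"baseline:       {baseline * 1e3:.2f} ms")
print(f"higher latency: {higher_latency * 1e3:.2f} ms")
print(f"half bandwidth: {half_bandwidth * 1e3:.2f} ms")
```

Because every GPU waits for the collective to finish before starting the next step, any increase in either term shows up directly as idle compute.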

For inference, the process of running trained models to generate answers or predictions, the challenges flip. Retrieval-augmented generation systems, which combine LLMs with search, demand real-time lookups and responses. And in cloud environments, multi-tenant inference means keeping workloads from different customers running smoothly, without interference. That requires lightning-fast, high-throughput networking that can handle massive demand with strict isolation between users.

Traditional Ethernet was designed for single-server workloads, not for the demands of distributed AI. Tolerating jitter and inconsistent delivery was once acceptable. Now, it’s a bottleneck. Traditional Ethernet switch architectures were never designed for consistent, predictable performance, and that legacy still shapes their latest generations.

Distributed computing requires a scale-out infrastructure built for zero-jitter operation: one that can handle bursts of extreme throughput, deliver low latency, maintain predictable and consistent RDMA performance, and isolate network noise. This is why InfiniBand networking is the gold standard for high-performance computing supercomputers and AI factories.

With NVIDIA Quantum InfiniBand, collective operations run inside the network itself using Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) technology, doubling data bandwidth for reductions. It uses adaptive routing and telemetry-based congestion control to spread flows across paths, guarantee deterministic bandwidth and isolate noise. These optimizations let InfiniBand scale AI communication with precision. It’s why NVIDIA Quantum infrastructure connects the majority of the systems on the TOP500 list of the world’s most powerful supercomputers, demonstrating 35% growth in just two years.

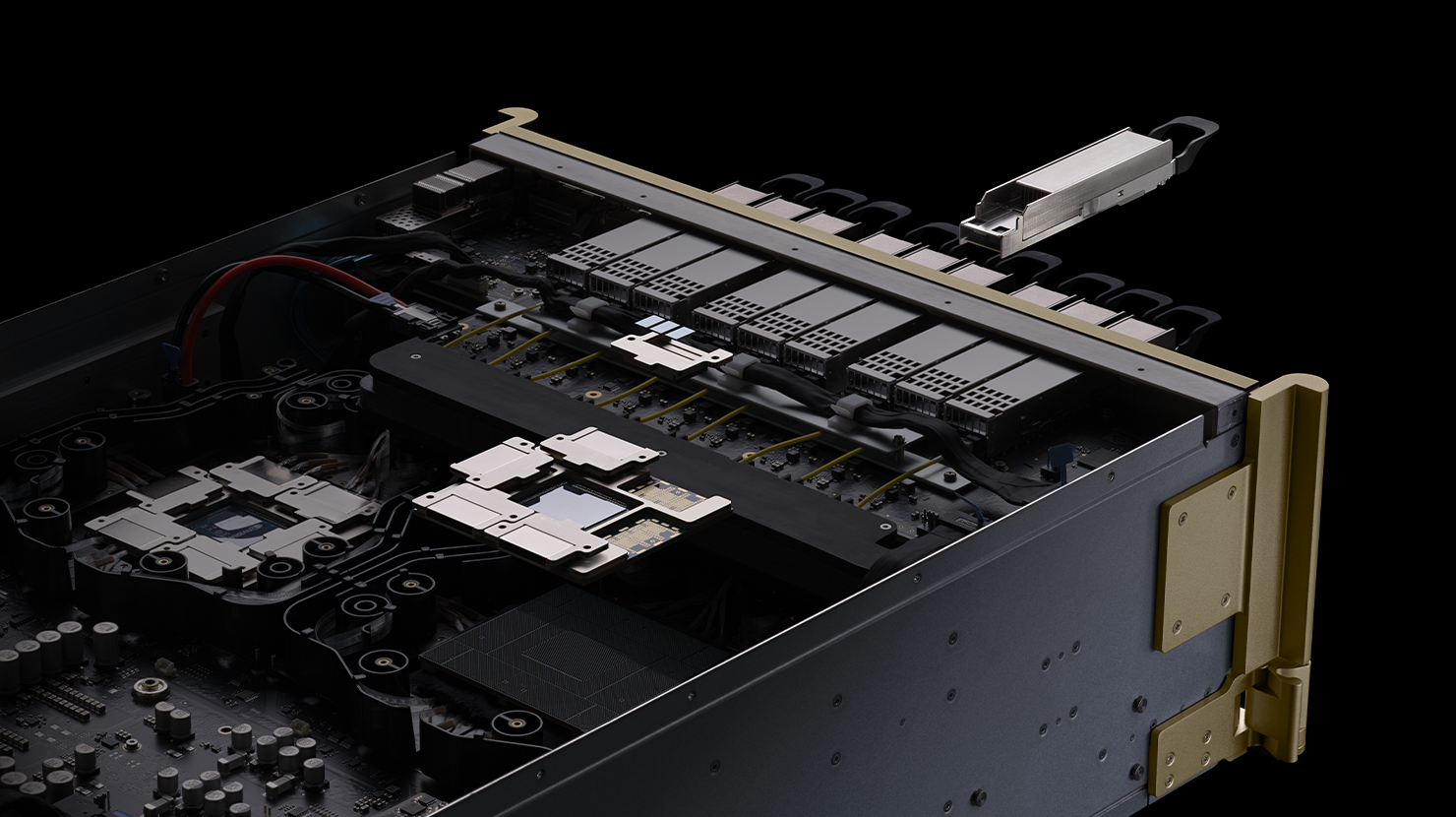

For clusters spanning dozens of racks, NVIDIA Quantum-X800 InfiniBand switches push InfiniBand to new heights. Each switch provides 144 ports of 800 Gb/s connectivity, featuring hardware-based SHARPv4, adaptive routing and telemetry-based congestion control. The platform integrates co-packaged silicon photonics to minimize the distance between electronics and optics, reducing power consumption and latency. Paired with NVIDIA ConnectX-8 SuperNICs delivering 800 Gb/s per GPU, this fabric links trillion-parameter models and drives in-network compute.

But hyperscalers and enterprises have invested billions in their Ethernet software infrastructure. They need a quick path forward that uses the existing ecosystem for AI workloads. Enter NVIDIA Spectrum-X: a new kind of Ethernet purpose-built for distributed AI.

Spectrum‑X Ethernet: Bringing AI to the Enterprise

Spectrum-X reimagines Ethernet for AI. Launched in 2023, Spectrum-X delivers lossless networking, adaptive routing and performance isolation. The SN5610 switch, based on the Spectrum-4 ASIC, supports port speeds up to 800 Gb/s and uses NVIDIA’s congestion control to maintain 95% data throughput at scale.

Spectrum-X is fully standards-based Ethernet. In addition to supporting Cumulus Linux, it supports the open-source SONiC network operating system, giving customers flexibility. A key ingredient is NVIDIA SuperNICs, based on NVIDIA BlueField-3 or ConnectX-8, which provide up to 800 Gb/s RoCE connectivity and offload packet reordering and congestion management.

Spectrum-X brings InfiniBand’s best innovations, like telemetry-driven congestion control, adaptive load balancing and direct data placement, to Ethernet, enabling enterprises to scale to hundreds of thousands of GPUs. Large-scale systems with Spectrum-X, including the world’s most colossal AI supercomputer, have achieved 95% data throughput with zero application latency degradation. Standard Ethernet fabrics would deliver only ~60% throughput due to flow collisions.
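The gap between 95% and roughly 60% throughput is easier to appreciate as usable bandwidth. The sketch below applies both figures to an 800 Gb/s link; pairing the percentages with that particular link speed is an assumption made for illustration, and the helper name `effective_gbps` is hypothetical.

```python
LINK_GBPS = 800  # assumed nominal link speed, in Gb/s


def effective_gbps(link_gbps: int, throughput_percent: int) -> float:
    """Usable bandwidth when only a percentage of the nominal rate is achieved."""
    return link_gbps * throughput_percent / 100


spectrum_x_gbps = effective_gbps(LINK_GBPS, 95)
standard_ethernet_gbps = effective_gbps(LINK_GBPS, 60)
advantage = spectrum_x_gbps / standard_ethernet_gbps

print(f"Spectrum-X:        {spectrum_x_gbps:.0f} Gb/s usable")
print(f"Standard Ethernet: {standard_ethernet_gbps:.0f} Gb/s usable")
print(f"Ratio:             {advantage:.2f}x")
```

Under these assumptions the same physical link carries a bit more than one and a half times as much useful traffic.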

A Portfolio for Scale‑Up and Scale‑Out

No single network can serve every layer of an AI factory. NVIDIA’s approach is to match the right fabric to the right tier, then tie everything together with software and silicon.

NVLink: Scale Up Inside the Rack

Inside a server rack, GPUs need to talk to each other as if they were different cores on the same chip. NVIDIA NVLink and NVLink Switch extend GPU memory and bandwidth across nodes. In an NVIDIA GB300 NVL72 system, 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell Ultra GPUs are connected in a single NVLink domain, with an aggregate bandwidth of 130 TB/s. NVLink Switch technology further extends this fabric: a single GB300 NVL72 system can offer 130 TB/s of GPU bandwidth, enabling clusters to support 9x the GPU count of a single 8-GPU server. With NVLink, the entire rack becomes one large GPU.
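The rack-level figures above can be checked with a few lines of arithmetic. The per-GPU number below assumes the 130 TB/s aggregate is split evenly across all 72 GPUs, which is a simplifying assumption rather than a statement from the article; the variable names are illustrative.

```python
AGGREGATE_TB_PER_S = 130   # NVLink domain bandwidth quoted for GB300 NVL72
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
GPUS_PER_SERVER = 8        # the conventional 8-GPU server used for comparison

# Even-split assumption: each GPU's share of the aggregate NVLink bandwidth.
per_gpu_tb_per_s = AGGREGATE_TB_PER_S / GPUS_PER_RACK

# How many 8-GPU servers' worth of GPUs sit in one NVLink domain.
scale_factor = GPUS_PER_RACK // GPUS_PER_SERVER

# Ratio of Blackwell Ultra GPUs to Grace CPUs in the rack.
gpus_per_cpu = GPUS_PER_RACK // CPUS_PER_RACK

print(f"Per-GPU share: about {per_gpu_tb_per_s:.2f} TB/s")
print(f"GPU count vs. one 8-GPU server: {scale_factor}x")
print(f"GPUs per Grace CPU: {gpus_per_cpu}")
```

The 9x figure in the text falls straight out of 72 divided by 8.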

Photonics: The Next Leap

To reach million-GPU AI factories, the network must break the power and density limits of pluggable optics. NVIDIA Quantum-X and Spectrum-X Photonics switches integrate silicon photonics directly into the switch package, delivering 128 to 512 ports of 800 Gb/s with total bandwidths ranging from 100 Tb/s to 400 Tb/s. These switches offer 3.5x more power efficiency and 10x better resiliency compared with traditional optics, paving the way for gigawatt-scale AI factories.
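The quoted bandwidth range follows from multiplying port count by port speed. This short sketch does that for the two endpoint configurations mentioned above; the helper name `switch_tbps` is hypothetical, and the results show that the 100 Tb/s and 400 Tb/s figures are rounded.

```python
PORT_GBPS = 800  # per-port speed quoted for the photonics switches


def switch_tbps(num_ports: int, port_gbps: int = PORT_GBPS) -> float:
    """Total switch bandwidth in Tb/s: ports x per-port Gb/s, converted to Tb/s."""
    return num_ports * port_gbps / 1000


smallest = switch_tbps(128)
largest = switch_tbps(512)

print(f"128 ports x 800 Gb/s = {smallest} Tb/s")
print(f"512 ports x 800 Gb/s = {largest} Tb/s")
```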

Delivering on the Promise of Open Standards

Spectrum-X and NVIDIA Quantum InfiniBand are built on open standards. Spectrum-X is fully standards-based Ethernet with support for open Ethernet stacks like SONiC, while NVIDIA Quantum InfiniBand and Spectrum-X conform to the InfiniBand Trade Association’s InfiniBand and RDMA over Converged Ethernet (RoCE) specifications. Key elements of NVIDIA’s software stack, including NCCL and DOCA libraries, run on a variety of hardware, and partners such as Cisco, Dell Technologies, HPE and Supermicro integrate Spectrum-X into their systems.

Open standards create the foundation for interoperability, but real-world AI clusters require tight optimization across the entire stack: GPUs, NICs, switches, cables and software. Vendors that invest in end-to-end integration deliver better latency and throughput. SONiC, the open-source network operating system hardened in hyperscale data centers, eliminates licensing and vendor lock-in and allows intense customization, but operators still choose purpose-built hardware and software bundles to meet AI’s performance needs. In practice, open standards alone don’t deliver deterministic performance; they need innovation layered on top.

Towards Million‑GPU AI Factories

AI factories are scaling fast. Governments in Europe are building seven national AI factories, while cloud providers and enterprises across Japan, India and Norway are rolling out NVIDIA-powered AI infrastructure. The next horizon is gigawatt-class facilities with a million GPUs. To get there, the network must evolve from an afterthought to a pillar of AI infrastructure.

The lesson from the gigawatt data center age is simple: the data center is now the computer. NVLink stitches together GPUs inside the rack. NVIDIA Quantum InfiniBand scales them across racks. Spectrum-X brings that performance to broader markets. Silicon photonics makes it sustainable. Everything is open where it matters, optimized where it counts.