The standard of knowledge used is the cornerstone of any knowledge science challenge. Dangerous high quality of knowledge results in misguided fashions, deceptive insights, and expensive enterprise selections. On this complete information, we’ll discover the development of a strong and concise knowledge cleansing and validation pipeline utilizing Python.

What’s a Information Cleansing and Validation Pipeline?

An information cleansing and validation pipeline is an automatic workflow that systematically processes uncooked knowledge to make sure its high quality meets accepted standards earlier than it’s subjected to evaluation. Consider it as a high quality management system on your knowledge:

- Detecting and coping with lacking values – Detects gaps in your dataset and applies an applicable therapy technique

- Validates knowledge sorts and codecs – Makes positive every discipline incorporates info of the anticipated sort

- Identifies and removes outliers – Detects outliers that will skew your evaluation

- Enforces enterprise guidelines – Applies domain-specific constraints and validation logic

- Maintains lineage – Tracks what transformations have been made and when

The pipeline basically acts as a gatekeeper to be sure that solely clear and validated knowledge flows into your analytics and machine studying workflows.

Why Information Cleansing Pipelines?

A number of the key benefits of automated cleansing pipelines are:

- Consistency and Reproducibility: Handbook strategies can introduce human error and inconsistency into the cleansing procedures. Automated pipelining implements the identical cleansing logic again and again, thereby making the outcome reproducible and plausible.

- Time and Useful resource Effectivity: Making ready the information can take between 70-80% of the time of an information scientist. Pipelines automate their knowledge cleansing course of, largely lowering this overhead, channeling the staff in the direction of the evaluation and modeling.

- Scalability: As an illustration, as knowledge volumes develop, guide cleansing turns into untenable. Pipelines optimize the processing of enormous datasets and address rising knowledge masses virtually routinely.

- Error Discount: Automated validation picks up knowledge high quality points that guide inspection might miss, therefore lowering the danger of drawing incorrect conclusions from falsified knowledge.

- Audit Path: Pipelines in place define for you exactly what steps have been adopted to scrub the information, which might be very instrumental in terms of regulatory compliance and debugging.

Setting Up the Improvement Setting

Earlier than embarking upon the pipeline constructing, allow us to ensure that we’ve all of the instruments. Our pipeline shall benefit from the Python powerhouse libraries:

import pandas as pd

import numpy as np

from datetime import datetime

import logging

from typing import Dict, Checklist, Any, Non-obligatoryWhy these Libraries?

The next libraries will likely be used within the code, adopted by the utility they supply:

- pandas: Robustly manipulates and analyzes knowledge

- numpy: Gives quick numerical operations and array dealing with

- datetime: Validates and codecs dates and instances

- logging: Allows monitoring of pipeline execution and errors for debugging

- typing: Just about provides sort hints for code documentation and avoidance of frequent errors

Defining the Validation Schema

A validation schema is actually the blueprint defining the expectations of knowledge as to the construction they’re primarily based and the constraints they observe. Our schema is to be outlined as:

VALIDATION_SCHEMA = {

'user_id': {'sort': int, 'required': True, 'min_value': 1},

'electronic mail': {'sort': str, 'required': True, 'sample': r'^[^@]+@[^@]+.[^@]+$'},

'age': {'sort': int, 'required': False, 'min_value': 0, 'max_value': 120},

'signup_date': {'sort': 'datetime', 'required': True},

'rating': {'sort': float, 'required': False, 'min_value': 0.0, 'max_value': 100.0}

}The schema specifies a lot of validation guidelines:

- Sort validation: Checks the information sort of the acquired worth for each discipline

- Required-field validation: Identifies obligatory fields that should not be lacking

- Vary validation: Units the minimal and most acceptable type of worth

- Sample validation: Common expressions for validation functions, for instance, legitimate electronic mail addresses

- Date validation: Checks whether or not the date discipline incorporates legitimate datetime objects

Constructing the Pipeline Class

Our pipeline class will act as an orchestrator that coordinates all operations of cleansing and validation:

class DataCleaningPipeline:

def __init__(self, schema: Dict[str, Any]):

self.schema = schema

self.errors = []

self.cleaned_rows = 0

self.total_rows = 0

# Setup logging

logging.basicConfig(stage=logging.INFO)

self.logger = logging.getLogger(__name__)

def clean_and_validate(self, df: pd.DataFrame) -> pd.DataFrame:

"""Principal pipeline orchestrator"""

self.total_rows = len(df)

self.logger.information(f"Beginning pipeline with {self.total_rows} rows")

# Pipeline phases

df = self._handle_missing_values(df)

df = self._validate_data_types(df)

df = self._apply_constraints(df)

df = self._remove_outliers(df)

self.cleaned_rows = len(df)

self._generate_report()

return dfThe pipeline follows a scientific method:

- Initialize monitoring variables to observe cleansing progress

- Arrange logging to seize pipeline execution particulars

- Execute cleansing phases in a logical sequence

- Generate stories summarizing the cleansing outcomes

Writing the Information Cleansing Logic

Let’s implement every cleansing stage with strong error dealing with:

Lacking Worth Dealing with

The next code will drop rows with lacking required fields and fill lacking non-compulsory fields utilizing median (for numerics) or ‘Unknown’ (for non-numerics).

def _handle_missing_values(self, df: pd.DataFrame) -> pd.DataFrame:

"""Deal with lacking values primarily based on discipline necessities"""

for column, guidelines in self.schema.gadgets():

if column in df.columns:

if guidelines.get('required', False):

# Take away rows with lacking required fields

missing_count = df[column].isnull().sum()

if missing_count > 0:

self.errors.append(f"Eliminated {missing_count} rows with lacking {column}")

df = df.dropna(subset=[column])

else:

# Fill non-compulsory lacking values

if df[column].dtype in ['int64', 'float64']:

df[column].fillna(df[column].median(), inplace=True)

else:

df[column].fillna('Unknown', inplace=True)

return dfInformation Sort Validation

The next code converts columns to specified sorts and removes rows the place conversion fails.

def _validate_data_types(self, df: pd.DataFrame) -> pd.DataFrame:

"""Convert and validate knowledge sorts"""

for column, guidelines in self.schema.gadgets():

if column in df.columns:

expected_type = guidelines['type']

attempt:

if expected_type == 'datetime':

df[column] = pd.to_datetime(df[column], errors="coerce")

elif expected_type == int:

df[column] = pd.to_numeric(df[column], errors="coerce").astype('Int64')

elif expected_type == float:

df[column] = pd.to_numeric(df[column], errors="coerce")

# Take away rows with conversion failures

invalid_count = df[column].isnull().sum()

if invalid_count > 0:

self.errors.append(f"Eliminated {invalid_count} rows with invalid {column}")

df = df.dropna(subset=[column])

besides Exception as e:

self.logger.error(f"Sort conversion error for {column}: {e}")

return dfIncluding Validation with error monitoring

Our constraint validation system assures that the information is inside limits and the format is appropriate:

def _apply_constraints(self, df: pd.DataFrame) -> pd.DataFrame:

"""Apply field-specific constraints"""

for column, guidelines in self.schema.gadgets():

if column in df.columns:

initial_count = len(df)

# Vary validation

if 'min_value' in guidelines:

df = df[df[column] >= guidelines['min_value']]

if 'max_value' in guidelines:

df = df[df[column] <= guidelines['max_value']]

# Sample validation for strings

if 'sample' in guidelines and df[column].dtype == 'object':

import re

sample = re.compile(guidelines['pattern'])

df = df[df[column].astype(str).str.match(sample, na=False)]

removed_count = initial_count - len(df)

if removed_count > 0:

self.errors.append(f"Eliminated {removed_count} rows failing {column} constraints")

return dfConstraint-Primarily based & Cross-Subject Validation

Superior validation is normally wanted when relations between a number of fields are thought of:

def _cross_field_validation(self, df: pd.DataFrame) -> pd.DataFrame:

"""Validate relationships between fields"""

initial_count = len(df)

# Instance: Signup date shouldn't be sooner or later

if 'signup_date' in df.columns:

future_signups = df['signup_date'] > datetime.now()

df = df[~future_signups]

eliminated = future_signups.sum()

if eliminated > 0:

self.errors.append(f"Eliminated {eliminated} rows with future signup dates")

# Instance: Age consistency with signup date

if 'age' in df.columns and 'signup_date' in df.columns:

# Take away information the place age appears inconsistent with signup timing

suspicious_age = (df['age'] < 13) & (df['signup_date'] < datetime(2010, 1, 1))

df = df[~suspicious_age]

eliminated = suspicious_age.sum()

if eliminated > 0:

self.errors.append(f"Eliminated {eliminated} rows with suspicious age/date mixtures")

return dfOutlier Detection and Removing

The consequences of outliers will be excessive on the outcomes of the evaluation. The pipeline has a complicated methodology for detecting such outliers:

def _remove_outliers(self, df: pd.DataFrame) -> pd.DataFrame:

"""Take away statistical outliers utilizing IQR methodology"""

numeric_columns = df.select_dtypes(embrace=[np.number]).columns

for column in numeric_columns:

if column in self.schema:

Q1 = df[column].quantile(0.25)

Q3 = df[column].quantile(0.75)

IQR = Q3 - Q1

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

outliers = (df[column] < lower_bound) | (df[column] > upper_bound)

outlier_count = outliers.sum()

if outlier_count > 0:

df = df[~outliers]

self.errors.append(f"Eliminated {outlier_count} outliers from {column}")

return dfOrchestrating the Pipeline

Right here’s our full, compact pipeline implementation:

class DataCleaningPipeline:

def __init__(self, schema: Dict[str, Any]):

self.schema = schema

self.errors = []

self.cleaned_rows = 0

self.total_rows = 0

logging.basicConfig(stage=logging.INFO)

self.logger = logging.getLogger(__name__)

def clean_and_validate(self, df: pd.DataFrame) -> pd.DataFrame:

self.total_rows = len(df)

self.logger.information(f"Beginning pipeline with {self.total_rows} rows")

# Execute cleansing phases

df = self._handle_missing_values(df)

df = self._validate_data_types(df)

df = self._apply_constraints(df)

df = self._remove_outliers(df)

self.cleaned_rows = len(df)

self._generate_report()

return df

def _generate_report(self):

"""Generate cleansing abstract report"""

self.logger.information(f"Pipeline accomplished: {self.cleaned_rows}/{self.total_rows} rows retained")

for error in self.errors:

self.logger.warning(error)Instance Utilization

Let’s see an indication of a pipeline in motion with an actual dataset:

# Create pattern problematic knowledge

sample_data = pd.DataFrame({

'user_id': [1, 2, None, 4, 5, 999999],

'electronic mail': ['[email protected]', 'invalid-email', '[email protected]', None, '[email protected]', '[email protected]'],

'age': [25, 150, 30, -5, 35, 28], # Accommodates invalid ages

'signup_date': ['2023-01-15', '2030-12-31', '2022-06-10', '2023-03-20', 'invalid-date', '2023-05-15'],

'rating': [85.5, 105.0, 92.3, 78.1, -10.0, 88.7] # Accommodates out-of-range scores

})

# Initialize and run pipeline

pipeline = DataCleaningPipeline(VALIDATION_SCHEMA)

cleaned_data = pipeline.clean_and_validate(sample_data)

print("Cleaned Information:")

print(cleaned_data)

print(f"nCleaning Abstract: {pipeline.cleaned_rows}/{pipeline.total_rows} rows retained")Output:

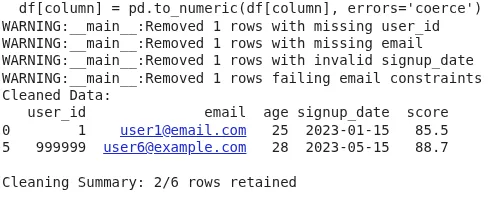

The output reveals the ultimate cleaned DataFrame after dropping rows with lacking required fields, invalid knowledge sorts, constraint violations (like out-of-range values or dangerous emails), and outliers. The abstract line stories what number of rows have been retained out of the overall. This ensures solely legitimate, analysis-ready knowledge strikes ahead, enhancing high quality, lowering errors, and making your pipeline dependable and reproducible.

Extending the Pipeline

Our pipeline has been made extensible. Under are some concepts for enhancement:

- Customized Validation Guidelines: Incorporate domain-specific validation logic by extending the schema format to just accept customized validation features.

- Parallel Processing: Course of massive datasets in parallel throughout a number of CPU cores utilizing applicable libraries comparable to multiprocessing.

- Machine Studying Integration: Herald anomaly detection fashions for detecting knowledge high quality points too intricate for rule-based methods.

- Actual-time Processing: Modify the pipeline for streaming knowledge with Apache Kafka or Apache Spark Streaming.

- Information High quality Metrics: Design a broad high quality rating that components a number of dimensions comparable to completeness, accuracy, consistency, and timeliness.

Conclusion

The notion of this kind of cleansing and validation is to test the information for all the weather that may be errors: lacking values, invalid knowledge sorts or constraints, outliers, and, in fact, report all this info with as a lot element as potential. This pipeline then turns into your place to begin for data-quality assurance in any kind of knowledge evaluation or machine-learning job. A number of the advantages you get from this method embrace automated QA checks so no errors go unnoticed, reproducible outcomes, thorough error monitoring, and easy set up of a number of checks with specific area constraints.

By deploying pipelines of this kind in your knowledge workflows, your data-driven selections will stand a far larger probability of being right and exact. Information cleansing is an iterative course of, and this pipeline will be prolonged in your area with additional validation guidelines and cleansing logic as new knowledge high quality points come up. Such a modular design permits new options to be built-in with out clashes with at present carried out ones.

Regularly Requested Questions

A. It’s an automatic workflow that detects and fixes lacking values, sort mismatches, constraint violations, and outliers to make sure solely clear knowledge reaches evaluation or modeling.

A. Pipelines are quicker, constant, reproducible, and fewer error-prone than guide strategies, particularly essential when working with massive datasets.

A. Rows with lacking required fields or failed validations are dropped. Non-obligatory fields get default values like medians or “Unknown”.

Login to proceed studying and luxuriate in expert-curated content material.