Black Forest Labs’ FLUX.1 Kontext [dev] image editing model is now available as an NVIDIA NIM microservice.

FLUX.1 models allow users to edit existing images with simple language, without the need for fine-tuning or complex workflows.

Deploying powerful AI requires curation of model variants, adaptation to manage all input and output data, and quantization to reduce VRAM requirements. Models must be converted to work with optimized inference backend software and connected to new AI application programming interfaces.

The FLUX.1 Kontext [dev] NIM microservice simplifies this process, unlocking faster generative AI workflows, and is optimized for RTX AI PCs.

Generative AI in Kontext

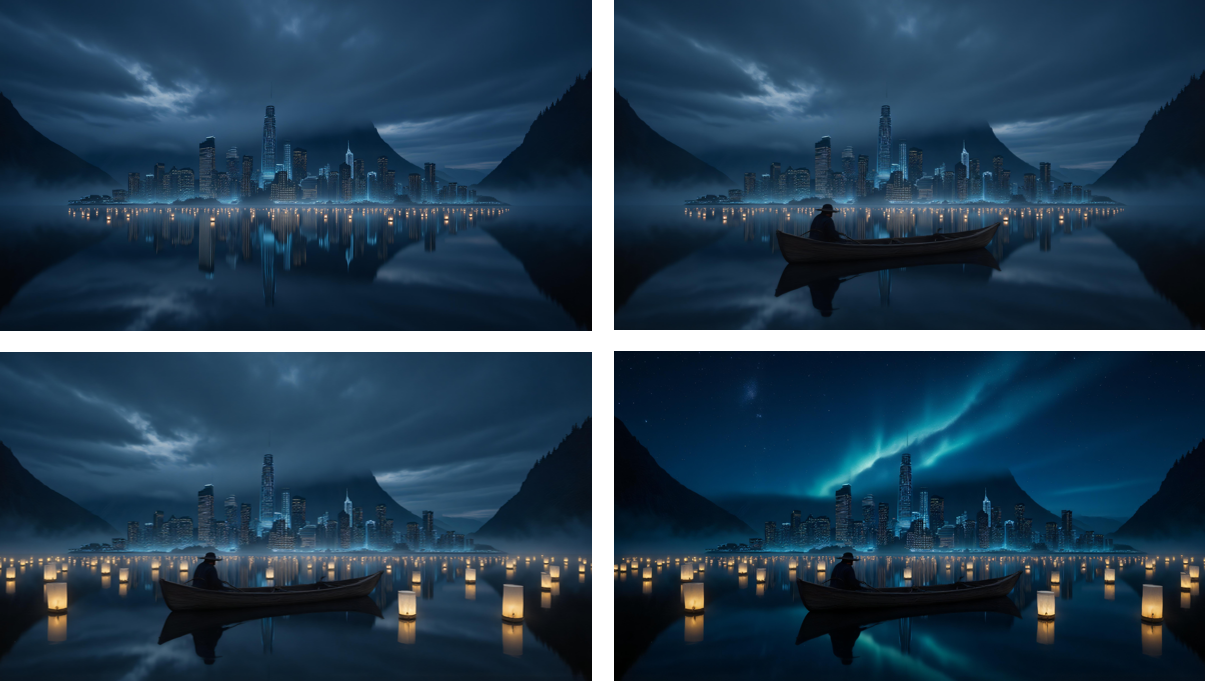

FLUX.1 Kontext [dev] is an open-weight generative model built for image editing. It features a guided, step-by-step generation process that makes it easier to control how an image evolves, whether refining small details or transforming an entire scene.

Because the model accepts both text and image inputs, users can easily reference a visual concept and guide how it evolves in a natural and intuitive way. This enables coherent, high-quality image edits that stay true to the original concept.

The FLUX.1 Kontext [dev] NIM microservice provides prepackaged, optimized files that are ready for one-click download through ComfyUI NIM nodes, making them easily accessible to users.

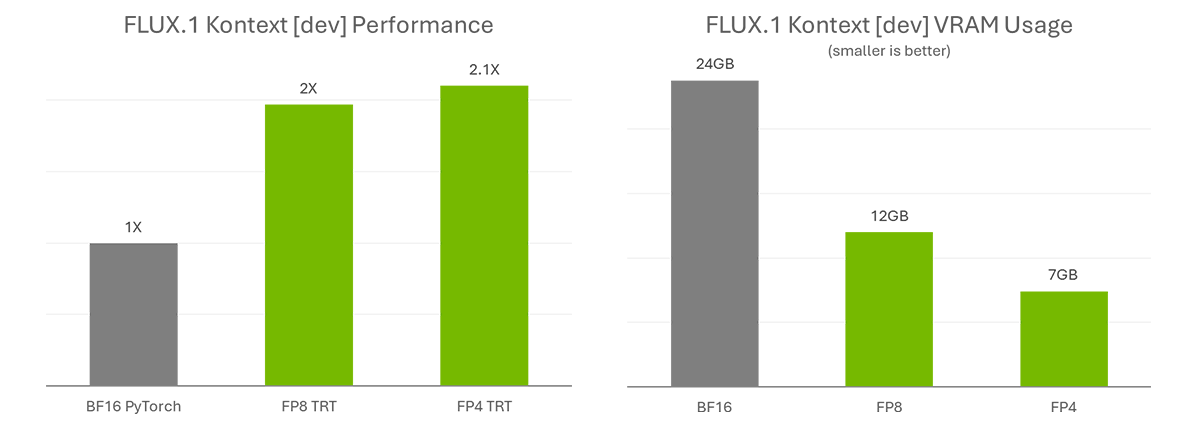

NVIDIA and Black Forest Labs worked together to quantize FLUX.1 Kontext [dev], reducing the model size from 24GB to 12GB for FP8 (NVIDIA Ada Generation GPUs) and 7GB for FP4 (NVIDIA Blackwell architecture). The FP8 checkpoint is optimized for GeForce RTX 40 Series GPUs, which have FP8 accelerators in their Tensor Cores. The FP4 checkpoint is optimized for GeForce RTX 50 Series GPUs and uses a new method called SVDQuant, which preserves image quality while reducing model size.
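The size figures above follow roughly from bits per weight. The short Python sketch below works through that arithmetic and pairs each GPU series with the checkpoint the text names for it. It is only an illustration: the parameter count of about 12 billion is an assumption inferred from the 24GB BF16 figure, and `estimate_size_gb` and `pick_checkpoint` are hypothetical helpers, not part of any NVIDIA or Black Forest Labs API.

```python
# Back-of-the-envelope checkpoint size: parameters * bits per weight / 8.
# ASSUMPTION: ~12 billion parameters, inferred from the 24GB BF16 figure
# (BF16 stores each weight in 16 bits, i.e. 2 bytes).
PARAMS = 12e9

BITS_PER_WEIGHT = {"BF16": 16, "FP8": 8, "FP4": 4}


def estimate_size_gb(params: float, bits_per_weight: int) -> float:
    """Return raw weight storage in GB (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def pick_checkpoint(gpu_series: int) -> str:
    """Hypothetical helper: map a GeForce RTX series number to a checkpoint.

    Mirrors the pairing described in the text: FP4 for RTX 50 Series and
    FP8 for RTX 40 Series. Falling back to BF16 for any other series is an
    assumption made for this sketch.
    """
    if gpu_series == 50:
        return "FP4"
    if gpu_series == 40:
        return "FP8"
    return "BF16"


if __name__ == "__main__":
    for name, bits in BITS_PER_WEIGHT.items():
        size = estimate_size_gb(PARAMS, bits)
        print(f"{name}: ~{size:.0f} GB of raw weights")
    print("RTX 40 Series ->", pick_checkpoint(40))
    print("RTX 50 Series ->", pick_checkpoint(50))
```

The estimate matches the stated 24GB and 12GB figures, but gives about 6GB for FP4 rather than the stated 7GB. The gap is plausibly due to data stored alongside the 4-bit weights, such as quantization scales or components kept at higher precision, though the article does not break this down.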

In addition, NVIDIA TensorRT, a framework to access the Tensor Cores in NVIDIA RTX GPUs for maximum performance, provides over 2x acceleration compared with running the original BF16 model with PyTorch.

These dramatic performance gains were previously limited to AI specialists and developers with advanced AI infrastructure knowledge. With the FLUX.1 Kontext [dev] NIM microservice, even enthusiasts can achieve these time savings with greater performance.

Get NIMble

FLUX.1 Kontext [dev] is available on Hugging Face with TensorRT optimizations and ComfyUI.

To get started, follow the directions on ComfyUI’s NIM nodes GitHub:

- Install NVIDIA AI Workbench.

- Get ComfyUI.

- Install NIM nodes through the ComfyUI Manager within the app.

- Accept the model licenses on Black Forest Labs’ FLUX.1 Kontext [dev] Hugging Face page.

- After clicking “Run,” the node will prepare the desired workflow and help download all necessary models.

NIM microservices are optimized for performance on NVIDIA GeForce RTX and RTX PRO GPUs and include popular models from the AI community. Explore NIM microservices on GitHub and build.nvidia.com.

Each week, the RTX AI Garage blog series features community-driven AI innovations and content for those looking to learn more about NVIDIA NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, productivity apps and more on AI PCs and workstations.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter. Join NVIDIA’s Discord server to connect with community developers and AI enthusiasts for discussions on what’s possible with RTX AI.

Follow NVIDIA Workstation on LinkedIn and X.
