Building applications with large language models (LLMs) is exciting, as it lets us create smart, interactive systems. However, making these apps more complex brings challenges, especially when multiple LLMs work together. How do we manage the flow of information between them? How do we make sure they work smoothly and understand the task? LangGraph answers these questions. This free tutorial is a great way for beginners to understand how LangGraph solves these problems. With hands-on examples and complete code, this guide will teach you how to manage multiple LLMs effectively, making your applications more powerful and efficient.

Understanding LangGraph

LangGraph is a powerful library from the LangChain ecosystem. It helps streamline the integration of LLMs, ensuring they work together seamlessly to understand and execute tasks, and it offers a neat way to build and manage LLM apps with many agents.

LangGraph lets developers define how multiple LLM agents talk to each other. It represents these workflows as graphs that can contain cycles, which keeps the communication organized and lets complex tasks run efficiently. Directed Acyclic Graphs (DAGs) work well for straight-line tasks, but because LangGraph supports cycles and can loop back, it allows for more complex and flexible systems. It is like how a smart agent might rethink things and use new information to update its responses or change its decisions.


Key Concepts of LangGraph

Here are some of the key concepts of LangGraph that you need to know:

1. Graph Structures

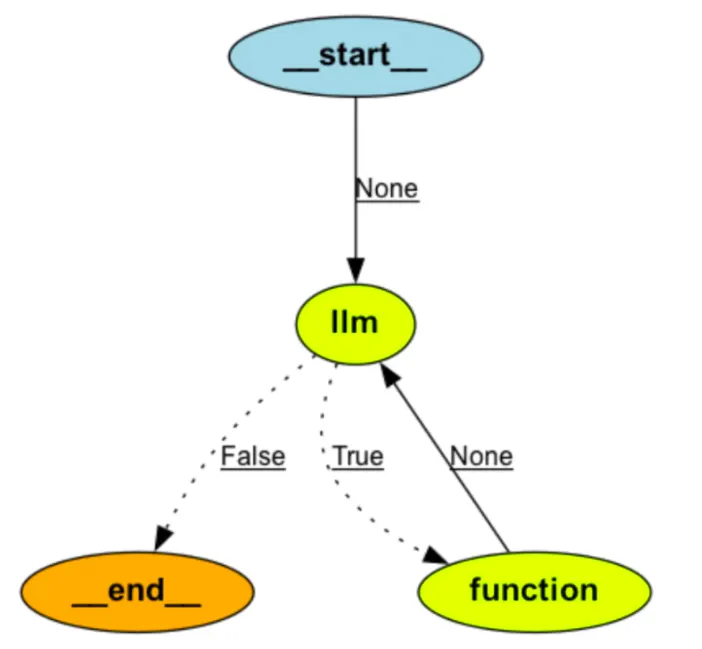

LangGraph's core idea is to model the application's workflow as a graph. This graph has two main components: nodes and edges.

- Nodes: Nodes are the fundamental building blocks, representing discrete units of work or computation within the workflow. Each node is a Python function that receives the current state and returns an update to it. Nodes can perform tasks such as calling an LLM or interacting with tools and APIs to manipulate data.

- Edges: Edges connect nodes and define the flow of execution. They can be:

  - Simple edges: Direct, unconditional transitions from one node to another.

  - Conditional edges: Branching logic that directs the flow based on node outputs, similar to if-else statements. This allows dynamic decision-making within the workflow.
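The node-and-edge model can be illustrated without any library. The sketch below is a deliberately simplified, dependency-free toy, not LangGraph's actual engine: nodes are plain functions that return partial updates, a simple edge is a fixed next-node name, and a conditional edge is a function that inspects the state and picks the next node. All names here (run_graph, classify, answer, acknowledge) are hypothetical.

```python
from typing import Callable, Dict, Union

END = "END"  # sentinel meaning "stop"

State = Dict[str, object]
Node = Callable[[State], State]
Edge = Union[str, Callable[[State], str]]  # simple edge (a name) or conditional edge (a router)


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Edge], start: str, state: State) -> State:
    current = start
    while current != END:
        update = nodes[current](state)  # a node returns only the keys it changes
        state = {**state, **update}     # merge the partial update into the state
        edge = edges[current]
        # A conditional edge is a function; a simple edge is just the next node's name
        current = edge(state) if callable(edge) else edge
    return state


def classify(state: State) -> State:
    return {"is_question": str(state["text"]).endswith("?")}


def answer(state: State) -> State:
    return {"reply": "Here is an answer."}


def acknowledge(state: State) -> State:
    return {"reply": "Noted."}


nodes = {"classify": classify, "answer": answer, "acknowledge": acknowledge}
edges = {
    "classify": lambda s: "answer" if s["is_question"] else "acknowledge",  # conditional edge
    "answer": END,       # simple edge
    "acknowledge": END,  # simple edge
}

print(run_graph(nodes, edges, "classify", {"text": "What is LangGraph?"})["reply"])  # Here is an answer.
print(run_graph(nodes, edges, "classify", {"text": "Thanks."})["reply"])  # Noted.
```

LangGraph adds much more on top (reducers, checkpoints, parallel branches), but the control flow follows the same idea.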

2. State Management

Keeping track of what is happening is vital when you have many agents, because every agent needs to know the current status of the task. LangGraph handles this by managing the state automatically: the library keeps track of a main state object and updates it as the agents do their jobs. The state object holds important information that is available at different points in the workflow, such as the chat history.

In a chatbot, the state can store the conversation, which helps the bot respond using what was said before. It can also store context data, like user preferences, past actions, or external data, which agents can use when making decisions. Internal variables can be kept here too: agents might use the state to track flags, counters, or other values that guide their actions and decisions.
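LangGraph decides per key how a node's output is merged into the state: by default a returned value overwrites the key, while a key annotated with a reducer (such as add_messages, or operator.add for lists) combines the old and new values. The dependency-free sketch below mimics that merge rule to show the difference; apply_update, REDUCERS, and the sample keys are hypothetical and not LangGraph functions.

```python
import operator
from typing import Any, Callable, Dict

# Per-key reducers; keys not listed here are simply overwritten
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "messages": operator.add,  # append new messages to the history
}


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    new_state = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        if reducer is not None and key in new_state:
            new_state[key] = reducer(new_state[key], value)  # combine old and new values
        else:
            new_state[key] = value  # default: last write wins
    return new_state


state = {"messages": ["user: hi"], "user_name": "unknown", "turns": 0}
state = apply_update(state, {"messages": ["bot: hello"], "turns": 1})
state = apply_update(state, {"messages": ["user: my name is Janvi"], "user_name": "Janvi", "turns": 2})

print(state["messages"])  # ['user: hi', 'bot: hello', 'user: my name is Janvi']
print(state["user_name"], state["turns"])  # Janvi 2
```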

3. Multi-agent Systems

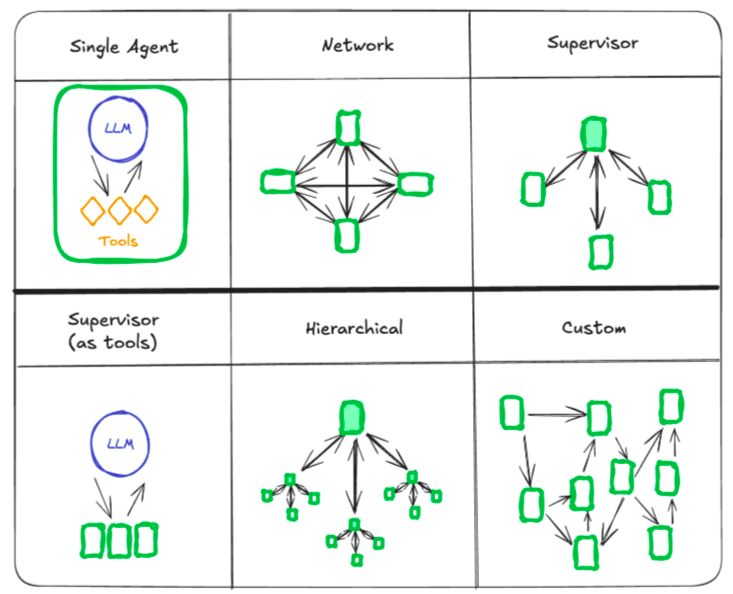

A multi-agent system consists of several independent agents that work together, or compete, to achieve a common goal. These agents use LLMs to make decisions and control the flow of an application. An application built around a single agent can grow complex as more tools and tasks are added, which may lead to challenges such as poor decision-making, difficult context management, and the need for specialization. A multi-agent system addresses these problems by breaking the application into smaller agents, each focusing on a specific task, such as planning or research.

The main benefits of using a multi-agent system are modularity, specialization, and control. Modularity makes development, testing, and maintenance easier, while specialization lets expert agents improve overall performance. Control lets you explicitly define how the agents communicate.

Architectures in Multi-agent Systems

Here are the various types of architectures used in multi-agent systems.

1. Network Architecture: In this architecture, every agent can communicate with every other agent, and each agent decides which agent to call next. This is very helpful when there is no clear sequence of operations. Below is a simple example of how it works using StateGraph.

```python
from langchain_openai import ChatOpenAI
from langgraph.types import Command
from langgraph.graph import StateGraph, MessagesState, START

model = ChatOpenAI()

def agent_1(state: MessagesState) -> Command:
    # Ask the LLM which agent should act next. This assumes the model is set up
    # (e.g. with structured output) to return a dict with "next_agent" and "content".
    response = model.invoke(...)
    # Route to the chosen agent and append the reply to the shared message list
    return Command(goto=response["next_agent"], update={"messages": [response["content"]]})

builder = StateGraph(MessagesState)
builder.add_node(agent_1)
# Add the other agents the same way
builder.add_edge(START, "agent_1")
graph = builder.compile()
```

2. Supervisor Architecture: A supervisor agent controls the decision-making process and routes tasks to the appropriate agents. Here's a sample of how it's done:

```python
def supervisor(state: MessagesState) -> Command:
    # The supervisor's LLM picks the next agent; as above, this assumes
    # a structured response with a "next_agent" field
    response = model.invoke(...)
    return Command(goto=response["next_agent"])

builder = StateGraph(MessagesState)
builder.add_node(supervisor)
builder.add_edge(START, "supervisor")
graph = builder.compile()
```

3. Supervisor (Tool-calling): In this architecture, the individual agents are exposed as tools, and the supervisor uses a tool-calling LLM to decide which tool (that is, which agent) to call. The tool executes its task and returns results that guide the next control-flow decision. A common pattern here is to wrap each agent in a function that can be used as a tool:

```python
def agent_1(state):
    # A sub-agent wrapped as a plain function so the supervisor can call it as a tool
    response = model.invoke(...)
    return response.content
```

4. Hierarchical Architecture: This approach tames the complexity of large multi-agent systems by organizing agents into teams, each with its own supervisor. A top-level supervisor decides which team to call. For instance:

```python
def top_level_supervisor(state: MessagesState) -> Command:
    # Choose which team (each team is a subgraph with its own supervisor) runs next
    response = model.invoke(...)
    return Command(goto=response["next_team"])

builder = StateGraph(MessagesState)
builder.add_node(top_level_supervisor)
builder.add_edge(START, "top_level_supervisor")
graph = builder.compile()
```

5. Handoffs in Multi-agent Systems: Handoffs let one agent pass control to another. Each agent returns a Command object that specifies the next agent to call and any updates to send to the state.

```python
def agent(state) -> Command:
    goto = get_next_agent(...)  # placeholder for your own routing logic
    return Command(goto=goto, update={"my_state_key": "my_state_value"})
```

In complex systems, agents may be nested inside subgraphs, where a node in a subgraph can direct control to another agent outside its own graph:

```python
def some_node_inside_alice(state):
    # graph=Command.PARENT sends control to the node "bob" in the parent graph
    return Command(goto="bob", graph=Command.PARENT)
```

Multi-agent systems enable modular, specialized designs in which agents handle tasks independently and communicate to solve problems efficiently. Network, supervisor, and hierarchical architectures each serve particular needs, while handoffs ensure smooth transitions between agents, maintaining flexibility and control.

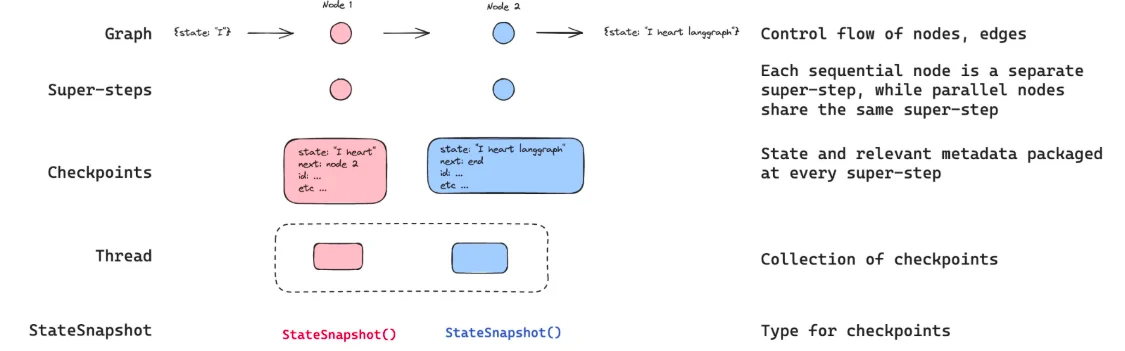

4. Persistence

Persistence means saving the progress of a process so that you can come back to it later, even after interruptions. Each step's state is saved, which helps with error recovery. It also supports human feedback during runs, and you can replay steps to debug or try new paths.

In LangGraph, persistence is implemented with checkpointers. The graph's state is saved after every super-step, and each saved state is called a checkpoint. Checkpoints are grouped under a thread, identified by a thread ID, which holds the accumulated history of a conversation or run.

Checkpointing happens automatically once you attach a checkpointer when compiling the graph; if you deploy on the LangGraph Platform, persistence is configured for you. A checkpoint is a snapshot of the graph's state that includes:

- config: Configuration associated with that checkpoint (such as the thread ID)

- metadata: Details about the step (e.g., which node wrote the update)

- values: The actual state values at that point

- next: The node(s) that will run next

- tasks: Information about upcoming tasks, including errors or interrupts

Each graph execution needs a thread ID to group its checkpoints. You can provide the thread ID through the config. Below is a sample of how it can be done:

```python
config = {"configurable": {"thread_id": "1"}}
```

To fetch the latest state within a thread, use the code below:

```python
graph.get_state({"configurable": {"thread_id": "1"}})
```

The code below shows how you can get a specific checkpoint:

```python
graph.get_state({
    "configurable": {
        "thread_id": "1",
        "checkpoint_id": "your_checkpoint_id",
    }
})
```

To get the state history, that is, all previous states, use this code:

```python
# Returns an iterator of state snapshots, newest first
history = graph.get_state_history({"configurable": {"thread_id": "1"}})
```

You can also update or edit the state manually at any point, using:

```python
graph.update_state(
    config={"configurable": {"thread_id": "1"}},
    values={"foo": "new_value"},
)
```

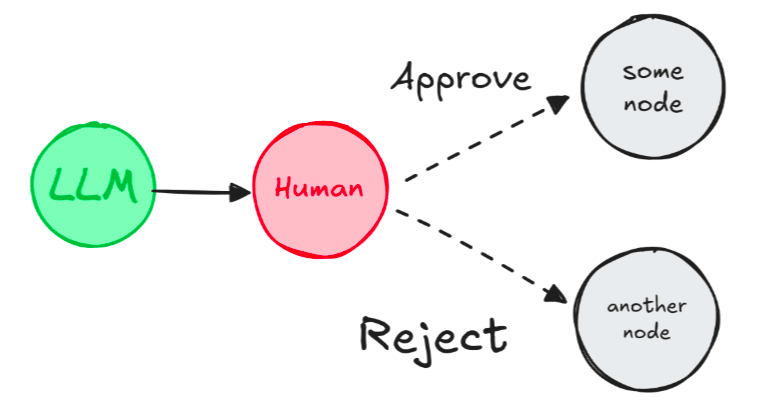

5. Human-in-the-Loop Integration

Human-in-the-loop lets you add human feedback at key steps of an automated LangGraph workflow. This matters in certain tasks because LLMs may generate uncertain or risky outputs, for example in tool calls, content generation, or decision-making. LangGraph's interrupt() function makes this possible by pausing the graph, surfacing data to a human, and resuming with their input via Command(resume=value). This enables review, correction, or data entry.

Human-in-the-loop supports patterns like Approve/Reject, Edit State, Provide Input, and Multi-turn Conversations. To use it, define a checkpointer and call interrupt() inside a node. You can then resume the graph using Command once the human responds.

Below is a sample of how you can use human-in-the-loop in LangGraph.

```python
from langgraph.types import interrupt, Command

def human_node(state):
    # Pause and surface the text to a human; interrupt() returns their input on resume
    value = interrupt({"text_to_revise": state["some_text"]})
    return {"some_text": value}

graph = graph_builder.compile(checkpointer=checkpointer)

# First run: executes until interrupt() and pauses
graph.invoke(some_input, config={"configurable": {"thread_id": "some_id"}})
# Resume the same thread, passing in the human's input
graph.invoke(Command(resume="Edited text"), config={"configurable": {"thread_id": "some_id"}})
```

This keeps workflows interactive, auditable, and accurate, which is ideal for high-stakes or collaborative AI use cases.

6. Streaming

LangGraph streams outputs as they are created, which lets users see results sooner and improves their experience with LLM apps. Streaming helps you build responsive apps by showing progress in real time. There are three main kinds of data to stream: workflow progress, LLM tokens, and custom updates.

Use .stream() (sync) or .astream() (async) to stream outputs. You can set stream_mode to control what you get:

- “values”: the full state after each graph step

- “updates”: only the changes after each node

- “custom”: any custom data you emit from inside a node

- “messages”: the LLM token stream, with metadata

- “debug”: as much information as possible throughout the run

You can pass multiple modes like this:

```python
for stream_type, data in graph.stream(inputs, stream_mode=["updates", "messages"]):
    if stream_type == "messages":
        print(data[0].content)  # AIMessageChunk
    elif stream_type == "updates":
        print(data)  # State update
```

Use .astream_events() if you need the full event stream. This is handy when migrating larger apps that already rely on LangChain's event stream.

Pro tip: For real-time UI feedback, use “messages” for token-by-token streaming and “updates” for backend state.

Why Use LangGraph?

LangGraph is ideal for developers building smart and flexible AI agents. Here's why:

- Reliable and controllable: Add moderation checks and human approvals, and keep context alive across long-running tasks.

- Customizable and extensible: Use low-level primitives to build agents your way, and design systems in which each agent plays a specific role.

- First-class streaming: See each token and step live, tracking the agent's reasoning as it happens.

You can also take the course from the LangChain Academy.

Building the Simplest Graph

Now that we have seen the key components of LangGraph, let's build a basic graph with three nodes and one conditional edge. This simple example shows how to invoke a graph that uses the key concepts of State, Nodes, and Edges.

Step 1: Define the Graph State

The State defines the data structure that is shared between nodes. It acts like a shared memory that flows through the graph.

```python
from typing_extensions import TypedDict

class State(TypedDict):
    graph_state: str
```

Here, we have used Python's TypedDict to declare that our state has a single key called graph_state, which stores a string.

Step 2: Create the Nodes

Nodes are just simple Python functions. Each one takes in the current state and returns a dictionary with the keys it wants to update, which LangGraph merges into the state.

```python
def node_1(state):
    print("---Node 1---")
    return {"graph_state": state['graph_state'] + " I'm"}
```

This function appends " I'm" to whatever string is in graph_state.

```python
def node_2(state):
    print("---Node 2---")
    return {"graph_state": state['graph_state'] + " extremely happy!"}

def node_3(state):
    print("---Node 3---")
    return {"graph_state": state['graph_state'] + " extremely sad!"}
```

These two nodes add an emotional tone, "happy!" or "sad!", to the sentence.

Step 3: Add Conditional Logic

Sometimes you want dynamic behavior, where the next step depends on logic or randomness. That is what conditional edges enable.

```python
import random
from typing import Literal

def decide_mood(state) -> Literal["node_2", "node_3"]:
    # Return the name of the next node; here the choice is random
    if random.random() < 0.5:
        return "node_2"
    return "node_3"
```

This function randomly picks between node_2 and node_3 with equal probability, simulating a simple mood selector.

Step 4: Build the Graph

Let's bring it all together using LangGraph's StateGraph class. This is where we define the entire graph structure.

```python
from IPython.display import Image, display
from langgraph.graph import StateGraph, START, END

# Initialize the graph with the state schema
builder = StateGraph(State)

# Add nodes to the graph
builder.add_node("node_1", node_1)
builder.add_node("node_2", node_2)
builder.add_node("node_3", node_3)
```

We start at the START node and route to node_1. Then we add a conditional edge from node_1 using decide_mood. After that, the graph continues to either node_2 or node_3 and ends at the END node.

```python
# Add edges to define the flow
builder.add_edge(START, "node_1")
builder.add_conditional_edges("node_1", decide_mood)
builder.add_edge("node_2", END)
builder.add_edge("node_3", END)

# Compile and visualize the graph
graph = builder.compile()
display(Image(graph.get_graph().draw_mermaid_png()))
```

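The compile() method performs basic validation, and draw_mermaid_png() lets you visualize the graph as a Mermaid diagram. By default, draw_mermaid_png() renders the image through the Mermaid.ink web service, so it needs network access.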

Step 5: Invoke the Graph

Finally, we can run the graph using the invoke() method.

```python
graph.invoke({"graph_state": "Hi, this is Janvi."})
```

This starts the graph at the START node and initializes graph_state with the sentence "Hi, this is Janvi.".

- node_1 appends " I'm" → "Hi, this is Janvi. I'm"

- decide_mood randomly chooses the path

- node_2 or node_3 appends either " extremely happy!" or " extremely sad!"

Output: each run prints ---Node 1--- followed by either ---Node 2--- or ---Node 3---, and invoke() returns the final state, for example {'graph_state': "Hi, this is Janvi. I'm extremely happy!"}.

This output shows how state flows and updates through each step of the graph.

Building a Support Chatbot with LangGraph Using OpenAI

Now that we have built the simplest graph, I'll show you how to use LangGraph to build a support chatbot, starting with basic functionality and progressively adding features like web search, memory, and human-in-the-loop. Along the way, we'll cover the core LangGraph concepts as well.

Our goal here is to create a chatbot that can answer questions using web search, remember past conversations, ask a human for help when needed, use a custom state for behavior, and rewind conversation paths (enabled by checkpointing).

Setup

Before building the chatbot, let's install the required packages.

```
!pip install -U langgraph langchain openai
```

This command installs:

- LangGraph: For building the graph structure.

- LangChain: LangChain's core building blocks, such as message types and tools, which LangGraph works with.

- OpenAI: The OpenAI Python SDK, for using OpenAI's models (like GPT-4).

We need to provide the OpenAI API key securely so the application can authenticate and use the GPT models. This function prompts for the key if it is not already set in the environment.

```python
import getpass
import os

def _set_env(var: str):
    # Prompt for the value only if the variable is not already set
    if not os.environ.get(var):
        os.environ[var] = getpass.getpass(f"{var}: ")

_set_env("OPENAI_API_KEY")
```

Part 1: Build a Basic Chatbot

We'll start by creating the simplest form of the chatbot.

1. Define State

The state defines the data structure that gets passed between nodes in the graph. Here, we define a state with a single key, messages, which holds the list of conversation messages.

```python
from typing import Annotated

from typing_extensions import TypedDict
from langgraph.graph import StateGraph, START, END
from langgraph.graph.message import add_messages

class State(TypedDict):
    # 'messages' holds the list of chat messages.
    # 'add_messages' ensures new messages are appended, not overwritten.
    messages: Annotated[list, add_messages]
```

2. Create Graph Builder

The StateGraph object is the entry point for defining the graph structure. It is initialized with the State definition we just created.

```python
graph_builder = StateGraph(State)
```

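3. Add Chatbot Node

We define a Python function chatbot that takes the current state, sends the conversation stored in state["messages"] to an OpenAI GPT model through the Chat Completions API, and returns the model's reply as an update to the messages key in the state.

```python
from langchain_core.messages import AIMessage
from openai import OpenAI

# The client reads OPENAI_API_KEY from the environment (set above with _set_env)
client = OpenAI()

# Map LangChain message types to OpenAI chat roles
ROLE_MAP = {"human": "user", "ai": "assistant", "system": "system"}

def chatbot(state: State):
    # add_messages stores LangChain message objects; convert them for the Chat Completions API
    messages = [{"role": ROLE_MAP[m.type], "content": m.content} for m in state["messages"]]
    response = client.chat.completions.create(
        model="gpt-4",  # You can also use "gpt-3.5-turbo" or any other OpenAI chat model
        messages=messages,
        max_tokens=150,
    )
    # Return the reply as an AIMessage; add_messages appends it to the history
    return {"messages": [AIMessage(content=response.choices[0].message.content)]}

graph_builder.add_node("chatbot", chatbot)
```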

4. Set Entry and Exit Points

Define the entry point (START) and exit point (END) for the graph execution.

```python
graph_builder.add_edge(START, "chatbot")
graph_builder.add_edge("chatbot", END)
```

5. Compile the Graph

Once all nodes and edges are defined, compile the graph structure.

```python
graph = graph_builder.compile()
```

6. Visualize (Optional)

LangGraph lets you visualize the compiled graph structure, which helps you understand the flow of execution. We can visualize the graph using tools like pygraphviz or Mermaid.

```python
from IPython.display import Image, display

try:
    display(Image(graph.get_graph().draw_mermaid_png()))
except Exception:
    pass  # Visualization is optional; skip it if rendering fails
```

7. Run the Chatbot

Set up a loop to interact with the chatbot. It takes user input, packages it into the expected State format ({"messages": […]}), and uses graph.stream to execute the graph. The stream method returns events as the graph progresses, and we print the assistant's final message.

```python
def stream_graph_updates(user_input: str):
    for event in graph.stream({"messages": [{"role": "user", "content": user_input}]}):
        for value in event.values():
            print("Assistant:", value["messages"][-1].content)

# Loop to chat with the bot
while True:
    try:
        user_input = input("User: ")
        if user_input.lower() in ["quit", "exit", "q"]:
            print("Goodbye!")
            break
        stream_graph_updates(user_input)
    except (EOFError, NotImplementedError):  # Fallback for environments without input()
        user_input = "What do you know about LangGraph?"
        print("User: " + user_input)
        stream_graph_updates(user_input)
        break
```

Part 2: Enhancing the Chatbot with Tools

To make the chatbot more knowledgeable, especially about recent information, we'll integrate a web search tool (Tavily). This involves enabling the LLM to request tool usage and adding graph components to handle the execution of these tools.

1. Install Tool Requirements

Install the required libraries for the Tavily search tool.

```
%pip install -U tavily-python langchain_community
```

2. Set Tool API Key

Configure the API key for the Tavily service.

```python
_set_env("TAVILY_API_KEY")  # Uses the function defined earlier
```

3. Define the Tool

Instantiate the TavilySearchResults tool, which returns 2 results. This tool will be used by both the LLM and the graph.

```python
from langchain_community.tools.tavily_search import TavilySearchResults

# Create a Tavily search tool instance, limited to 2 results
tool = TavilySearchResults(max_results=2)
tools = [tool]  # List of tools the bot can use
```

Part 3: Add Memory to the Chatbot

To enable multi-turn conversations in which the bot remembers previous messages, we introduce LangGraph's checkpointing feature.

Add Checkpointer

Use the MemorySaver checkpointer to store the conversation state in memory. For production, you might use a persistent backend like SQLite or Postgres.

```python
from langgraph.checkpoint.memory import MemorySaver

memory = MemorySaver()
```

Part 4: Human-in-the-loop

Sometimes the AI agent needs human input before proceeding. We achieve this by creating a tool that pauses the graph's flow.

Define Human Assistance Tool

```python
from langchain_core.tools import tool
from langgraph.types import interrupt

@tool
def human_assistance(query: str) -> str:
    """Request assistance from a human."""
    print(f"Pausing for human assistance regarding: {query}")
    # interrupt() pauses graph execution and waits for input
    human_response = interrupt({"query": query})
    return human_response["data"]
```

This tool pauses the graph and waits for human input before proceeding.

Deploying Your LangGraph Applications

Once you have built your LangGraph application, the next step is to run it, either on your local machine or on a cloud platform, for further development and testing. LangGraph supports several deployment options, each with different workflows and infrastructure.

The Cloud SaaS model handles everything for you. The Self-Hosted Data Plane lets you run apps in your own cloud while using LangChain's control plane. With the Self-Hosted Control Plane, you manage everything yourself. Or choose Standalone Containers for full flexibility using Docker.

Use Cases of LangGraph

LangGraph is used to build interactive and intelligent AI agents. Let's explore some of its use cases.

Improved Customer Service: LangGraph can be used to develop advanced customer-support chatbots that recall past purchases and customer preferences. Using that history, they can answer questions about orders and hand off to a human when necessary, so customers' problems are solved faster.

Research Assistant for AI: A research assistant can also be built with LangGraph. It can search for scholarly articles and highlight the important information, which researchers and students can then use to gain insights across various fields.

Personalized Learning: With LangGraph, we can also build personalized learning systems that adjust content to the learner. Such a system can identify the learner's weaker areas and recommend resources accordingly, creating a personalized learning experience that improves engagement and outcomes.

Streamlining Business Tasks: LangGraph can also help automate business processes such as document approval and project management, and agents can be used to analyze data. Automation increases productivity and reduces human error, letting teams focus on higher-level tasks.


Conclusion

In this LangGraph tutorial for beginners, you learned how to build interactive AI systems that go beyond simple Q&A bots. Through LangGraph examples, we saw how LangGraph manages state, integrates multiple agents, and allows human input. The guide showed how to build a support chatbot that can handle web searches, remember past interactions, and even involve human intervention. By using LangGraph, developers can build flexible, adaptive systems that handle complex tasks. Whether you are building a chatbot, a research assistant, or a personalized learning tool, LangGraph provides the structure and tools you need for efficient development.

Frequently Asked Questions

A. LangGraph is a powerful library that lets developers build complex, advanced AI agents that interact with large language models. It manages workflows using a graph structure, and with this structure multiple agents can be built to handle complex tasks.

A. LangGraph works by defining workflows as graphs. A graph consists of nodes (tasks or computations) and edges (connections between tasks). LangGraph handles state management, making sure each agent has the information it needs to perform its task and interact with other agents.

A. LangGraph offers:

– State management, which keeps track of data as the agent performs tasks.

– Multi-agent support, which lets multiple agents work together within a graph.

– Persistence with checkpointers, which save the state at each step to enable error recovery and debugging.

– Human-in-the-loop, which pauses the workflow for human review and approval.

A. Yes, LangGraph integrates easily with OpenAI's GPT models. It lets us build applications that use the power of LLMs, such as chatbots and AI assistants, while managing complex workflows and state across multiple agents.

A. Yes, this LangGraph tutorial for beginners is designed to help you get started. It walks through the key concepts with LangGraph examples and explains how to build systems step by step, and it is free, so you can learn the framework at no cost.
