Ever thought you could carry a powerful AI assistant in your pocket? Not just an app, but a sophisticated, configurable, private, high-performance AI language model? Meet Gemma 3n. This isn’t just another tech fad. It’s about putting a high-performance language model directly in your hands, on the phone in your pocket. Whether you’re coming up with blog ideas on the train, translating messages on the go, or simply curious to see the future of AI, Gemma 3n offers a remarkably simple and deeply satisfying experience. Let’s jump in and see, step by step, how you can make all the AI magic happen on your mobile device.

What’s Gemma 3n?

Gemma 3n is a member of Google’s Gemma family of open models, designed to run well on low-resource devices such as smartphones. With an effective size of roughly 2 billion or 4 billion parameters, depending on the variant, Gemma 3n strikes a strong balance between capability and efficiency, making it a good option for on-device AI work such as smart assistants, text processing, and more.

Gemma 3n Performance and Benchmarks

Gemma 3n is a recent addition to Google’s family of open large language models, built explicitly for mobile phones, tablets, and other edge hardware, with a focus on speed and efficiency on low-resource devices. Here is a brief look at its real-world performance and benchmarks:

Model Sizes & System Requirements

- Model Sizes: E2B (5B raw parameters, with a memory footprint comparable to a 2B model) and E4B (8B raw parameters, with a memory footprint comparable to a 4B model).

- RAM Required: E2B runs in as little as 2GB of RAM; E4B needs only about 3GB, well within the capabilities of most modern smartphones and tablets.
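The 2GB and 3GB figures make more sense with a quick calculation. The sketch below assumes that weight memory is roughly parameter count × bits per weight ÷ 8, uses the effective parameter counts (2B and 4B) on the assumption that these are what must stay resident in memory, and ignores the KV cache, activations, and app overhead. Treat its numbers as rough lower bounds, not official requirements.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; KV cache, activations, and runtime overhead are ignored.

def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8

# Effective parameter counts for the two variants (assumed to be the resident set).
for name, effective_params in [("E2B", 2.0), ("E4B", 4.0)]:
    for bits in (16, 8, 4):
        gb = weight_memory_gb(effective_params, bits)
        print(f"{name} @ {bits}-bit: ~{gb:.1f} GB")
```

At 4-bit, E4B’s weights come to about 2 GB, which is consistent with the roughly 3GB figure above once runtime overhead is added; use this as a sanity check rather than a guarantee.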

Speed & Latency

- Response Speed: Up to 1.5x faster than previous on-device models at producing the first response, with typical throughput of 60 to 70 tokens/second on recent mobile processors.

- Startup & Inference: A time-to-first-token as low as 0.3 seconds lets chat and assistant applications feel highly responsive.
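To see what these numbers mean for a single chat reply, the total wait can be approximated as time-to-first-token plus the remaining tokens divided by throughput. This sketch plugs in the figures quoted above; the 150-token reply length is an arbitrary example, not a benchmark, and a constant decode rate is a simplifying assumption.

```python
def response_time_s(ttft_s: float, n_tokens: int, tokens_per_s: float) -> float:
    """Approximate wall-clock time for a reply: time-to-first-token plus decode time.

    Assumes a constant decode rate, which real devices only roughly sustain
    (thermal throttling and background load can lower it).
    """
    return ttft_s + n_tokens / tokens_per_s

# A 150-token reply using the figures quoted above (0.3 s TTFT, 60-70 tokens/s).
for rate in (60, 70):
    print(f"{rate} tok/s: {response_time_s(0.3, 150, rate):.2f} s")
```

With these inputs the estimate lands between about 2.4 and 2.8 seconds, which is why a short time-to-first-token matters: the reply starts appearing almost immediately even though the full answer takes a few seconds to stream.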

Benchmark Scores

- LMArena Leaderboard: E4B is the first model under 10B parameters to reach a score above 1300, outperforming similarly sized local models across a variety of tasks.

- MMLU Score: Gemma 3n E4B achieves ~48.8%, indicating solid reasoning and general knowledge.

- Intelligence Index: Approximately 28 for E4B, competitive among local models under 10B parameters.

Quality & Efficiency Innovations

- Quantization: Supports both 4-bit and 8-bit quantized versions with minimal quality loss, so it can run on devices with as little as 2-3GB of RAM.

- Multimodal: The E4B model can handle text, images, audio, and even short video on-device, with a context window of up to 32K tokens (well above most competitors in its size class).

- Optimizations: Uses several techniques, such as Per-Layer Embeddings (PLE), selective parameter activation, and the MatFormer architecture, to maximize speed, reduce the RAM footprint, and produce good-quality output despite its small size.
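Quantization saves memory by storing each weight as a small integer plus a shared scale factor. The sketch below uses simple symmetric per-tensor rounding in plain Python to show why an 8-bit copy loses less precision than a 4-bit one. It illustrates the general idea only; it is not the scheme Google or any particular app uses, and the weight values are made up.

```python
# Symmetric per-tensor quantization: a simplified illustration of 4-bit vs 8-bit weights.
# Assumption: real Gemma 3n builds use their own (typically per-channel or per-block) schemes.

def quantize(weights, bits):
    """Map floats to integers in [-qmax, qmax] using one shared scale factor."""
    qmax = 2 ** (bits - 1) - 1          # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in q]

def max_error(weights, bits):
    """Largest absolute difference between original and reconstructed weights."""
    q, scale = quantize(weights, bits)
    return max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))

weights = [0.12, -0.5, 0.33, 0.07, -0.91, 0.44]  # made-up example values
for bits in (8, 4):
    print(f"{bits}-bit max reconstruction error: {max_error(weights, bits):.4f}")
```

The rounding error is at most half the scale factor, and the scale is about 18 times larger at 4-bit (divided by 7) than at 8-bit (divided by 127). That is the trade-off behind choosing a 4-bit build for memory savings or an 8-bit build for output quality.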

What Are the Benefits of Gemma 3n on Mobile?

- Privacy: Everything runs locally, so your data stays private.

- Speed: On-device processing means faster response times.

- No Internet Required: Many capabilities remain available even without an active internet connection.

- Customization: Combine Gemma 3n with your favorite mobile apps or workflows.

Prerequisites

A modern smartphone (Android or iOS) with enough storage and at least 6GB of RAM for better performance, plus some basic knowledge of installing and using mobile applications.

Step-by-Step Guide to Running Gemma 3n on Mobile

Step 1: Choose the Appropriate Application or Framework

Several apps and frameworks support running large language models such as Gemma 3n on mobile devices, including:

- LM Studio: A popular application that runs models locally through a simple interface.

- MLC Chat (MLC LLM): An open-source application that enables local LLM inference on both Android and iOS.

- Ollama Mobile: If it supports your platform.

- Custom Apps: Some apps let you load and run models (e.g., Hugging Face Transformers apps for mobile).

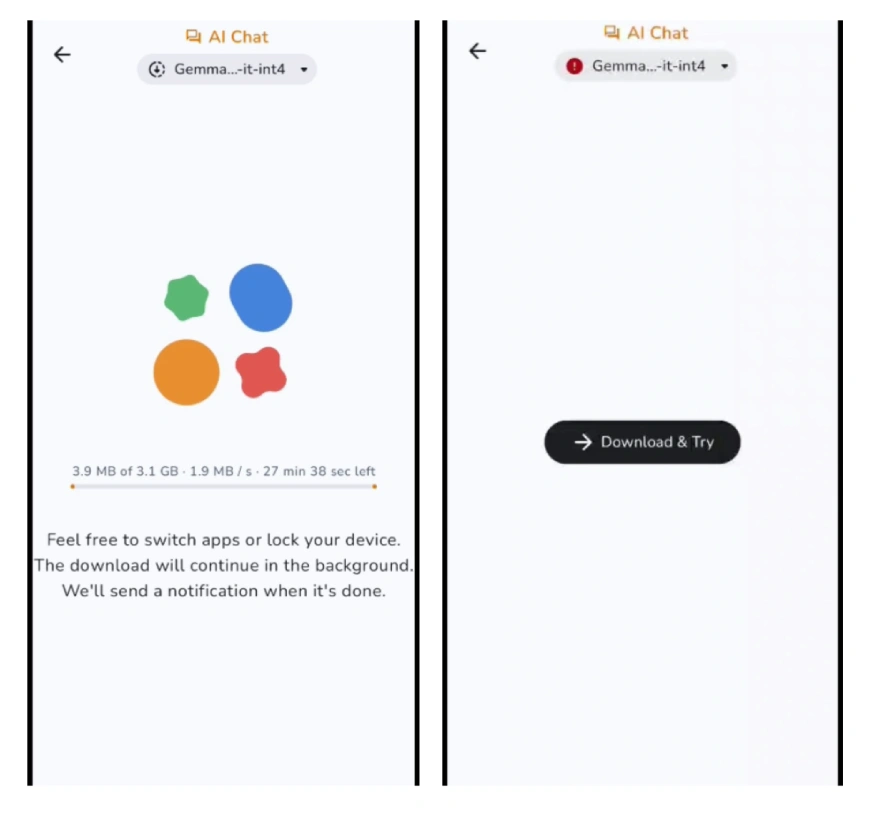

Step 2: Download the Gemma 3n Model

You can find it by searching for “Gemma 3n” in model repositories such as Hugging Face, or by searching on Google to find Google’s official AI model releases directly.

Note: Make sure to select a quantized version (e.g., 4-bit or 8-bit) for mobile to save storage space and memory.
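Model repositories often list several builds of the same model, so spotting the quantized file is the main task in this step. The helper below is hypothetical: it assumes quantized files carry markers such as “Q4_K_M”, “Q8_0”, “int4”, or “int8” in their names, which is a common convention but not guaranteed, and the example file names are made up for illustration. Always check the actual model page.

```python
import re

# Hypothetical helper: pick a quantized build from a repository's file listing.
# Assumption: filenames contain markers like "Q4_K_M", "Q8_0", "int4", or "int8".
QUANT_PATTERNS = {
    4: re.compile(r"(q4|int4)", re.IGNORECASE),
    8: re.compile(r"(q8|int8)", re.IGNORECASE),
}

def pick_quantized(filenames, preferred_bits=(4, 8)):
    """Return the first filename matching the preferred bit widths, in order, or None."""
    for bits in preferred_bits:
        for name in filenames:
            if QUANT_PATTERNS[bits].search(name):
                return name
    return None

# Made-up listing for illustration only.
listing = [
    "README.md",
    "gemma-3n-E2B-it-F16.gguf",
    "gemma-3n-E2B-it-Q8_0.gguf",
    "gemma-3n-E2B-it-Q4_K_M.gguf",
]
print(pick_quantized(listing))        # gemma-3n-E2B-it-Q4_K_M.gguf
print(pick_quantized(listing, (8,)))  # gemma-3n-E2B-it-Q8_0.gguf
```

On a computer, a real listing could come from `huggingface_hub.list_repo_files`; Gemma repositories are typically gated, so you may need to accept the license and sign in before downloading.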

Step 3: Import the Model into Your Mobile App

- Launch your LLM app (e.g., LM Studio, MLC Chat).

- Tap the “Import” or “Add Model” button.

- Browse to the Gemma 3n model file you downloaded and import it.

Note: The app may walk you through additional optimization or quantization steps to make sure the model runs well on mobile.
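Before importing, it can be worth confirming that a multi-gigabyte download arrived intact. If the page you downloaded from publishes a SHA-256 checksum (many model repositories show one per file, but that is an assumption about your source), a short script on a computer can compare it. This sketch uses only Python’s standard library and reads the file in chunks so it never has to fit in memory.

```python
import hashlib
import os
import tempfile

def sha256_of(path, chunk_size=1 << 20):
    """Hash a (possibly multi-gigabyte) file in 1 MiB chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_download(path, expected_sha256):
    """Return True if the file's SHA-256 matches the published value (case-insensitive)."""
    return sha256_of(path) == expected_sha256.strip().lower()

# Demo with a tiny temporary file standing in for the model download.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello")
    tmp_path = tmp.name
print(verify_download(tmp_path, hashlib.sha256(b"hello").hexdigest()))  # True
os.remove(tmp_path)
```

If the check fails, re-download the file rather than importing it; a truncated model file usually fails to load or produces garbage output.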

Step 4: Set Up Model Preferences

Configure the trade-off between performance and accuracy: lower-bit quantization (e.g., 4-bit) is faster and uses less memory, while higher-bit quantization (e.g., 8-bit) gives better output but runs slower. If desired, you can also create prompt templates, conversation styles, integrations, and so on.
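Prompt templates are usually handled by the app, but it helps to know what the model actually receives. The sketch below is a hypothetical helper that wraps a conversation in Gemma-style turn markers (`<start_of_turn>` and `<end_of_turn>` with `user` and `model` roles). Because Gemma’s chat format has no dedicated system turn, it folds a system instruction into the first user turn, which is one common convention; whether your app does the same is an assumption you should check.

```python
# Hypothetical helper that formats a conversation with Gemma-style turn markers.
# Assumptions: the app does not already apply a chat template, and the tokenizer
# adds the <bos> token itself, so it is omitted here.

def format_gemma_prompt(turns, system=None):
    """Build a prompt string from (role, text) pairs, where role is "user" or "model".

    A system instruction, if given, is prepended to the first user turn.
    """
    parts = []
    for i, (role, text) in enumerate(turns):
        if role not in ("user", "model"):
            raise ValueError(f"unknown role: {role}")
        if i == 0 and system and role == "user":
            text = f"{system}\n\n{text}"
        parts.append(f"<start_of_turn>{role}\n{text}<end_of_turn>\n")
    parts.append("<start_of_turn>model\n")  # cue the model to answer next
    return "".join(parts)

prompt = format_gemma_prompt(
    [("user", "Draft a short thank-you note to a colleague.")],
    system="You are a concise writing assistant.",
)
print(prompt)
```

A reusable template like this is an easy way to keep a consistent tone (for example, “concise writing assistant”) across many chats.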

Step 5: Start Using Gemma 3n

Use the chat or prompt interface to talk to the model. Feel free to ask questions, generate text, or use it as a writing or coding assistant, depending on your needs.
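Long conversations eventually fill the context window. Most apps handle this automatically, but if you build your own integration, one simple strategy is to drop the oldest turns first. In this sketch, the estimate of about four characters per token is an assumption rather than Gemma’s real tokenizer, and the budget is based on the 32K-token context window quoted earlier, treated conservatively as 32,000 tokens.

```python
# Sketch: keep a multi-turn chat within a fixed token budget by dropping the oldest turns.
# Assumptions: ~4 characters per token is a rough heuristic, not Gemma's tokenizer,
# and 32,000 is a conservative reading of the 32K context window.

CONTEXT_TOKENS = 32_000
RESERVED_FOR_REPLY = 1_000  # leave room for the model's answer

def estimate_tokens(text):
    """Very rough token estimate; real apps should use the model's tokenizer."""
    return max(1, len(text) // 4)

def trim_history(turns, budget=CONTEXT_TOKENS - RESERVED_FOR_REPLY):
    """Return the most recent (role, text) turns whose estimated total fits the budget."""
    kept, used = [], 0
    for role, text in reversed(turns):
        cost = estimate_tokens(text)
        if used + cost > budget:
            break
        kept.append((role, text))
        used += cost
    return list(reversed(kept))

history = [("user", "a" * 40), ("model", "b" * 40), ("user", "c" * 40)]
print(trim_history(history, budget=25))  # keeps only the last two turns
```

Dropping whole turns from the front keeps the most recent exchange intact, which usually matters most for follow-up questions; a more elaborate approach would summarize older turns instead of discarding them.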

Tips for Getting the Best Results

- Close background apps to free up system resources.

- Use the latest version of your app for the best performance.

- Adjust settings to find an acceptable balance between performance and quality for your needs.

Possible Uses

- Drafting private emails and messages.

- Real-time translation and summarization.

- On-device coding assistance for developers.

- Brainstorming ideas and drafting stories or blog content on the go.


Conclusion

Running Gemma 3n on a mobile device puts advanced artificial intelligence right in your pocket, with no shortage of potential use cases and no compromise on privacy or convenience. Whether you’re a casual AI user with a little curiosity, a busy professional looking for a productivity boost, or a developer keen to experiment, Gemma 3n gives you every opportunity to explore and personalize the experience. With so many ways to innovate, you’ll discover new ways to streamline tasks, spark fresh insights, and make connections, all without an internet connection. So try it out, and see how much AI can support your everyday life, wherever you go!
