For all of the duties associated to knowledge science and machine studying, an important factor that defines how a mannequin will carry out relies on how good our knowledge is. Python Pandas and SQL are among the many highly effective instruments that may assist in extracting and manipulating knowledge effectively. By combining these two collectively, knowledge analysts can carry out advanced evaluation on even giant datasets. On this article, we’ll discover how one can mix Python Pandas with SQL to reinforce the standard of knowledge evaluation.

Pandas and SQL: Overview

Earlier than utilizing Pandas and SQL collectively. First, we’ll undergo Pandas and SQL is able to and their key options.

What’s Pandas?

Pandas is a software program library written for Python programming language for knowledge manipulation and evaluation. It presents operations for manipulating tables, knowledge constructions, and time sequence knowledge.

Key Options of Pandas

- Pandas DataFrames enable us to work with structured knowledge.

- It presents completely different functionalities like sorting, grouping, merging, reshaping, and filtering knowledge.

- It’s environment friendly in dealing with lacking knowledge values.

Be taught Extra: The Final Information to Pandas For Information Science!

What’s SQL?

SQL stands for Structured Question Language, which is used for extracting, managing, and manipulating relational databases. It’s helpful in dealing with structured knowledge by incorporating relations amongst entities and variables. It permits for inserting, updating, deleting, and managing the saved knowledge in tables.

Key Options of SQL

- It gives a sturdy approach for querying giant datasets.

- It permits the creation, modification, and deletion of database schemas.

- The syntax of SQL is optimized for environment friendly and sophisticated question operations like JOIN, GROUPBY, ORDER BY, HAVING, utilizing sub-queries.

Be taught Extra: SQL For Information Science: A Newbie’s Information!

Why Mix Pandas with SQL?

Utilizing Pandas and SQL collectively makes the code extra readable and, in sure instances, simpler to implement. That is true for advanced workflows, as SQL queries are a lot clearer and simpler to learn than the equal Pandas code. Furthermore, a lot of the relational knowledge originates from databases, and SQL is without doubt one of the predominant instruments to cope with relational knowledge. This is without doubt one of the predominant the explanation why working professionals like knowledge analysts and knowledge scientists choose to combine their functionalities.

How Does pandasql Work?

To mix SQL queries with Pandas, one wants a typical bridge between these two, so to beat this drawback, ‘pandasql’ comes into the image. Pandasql permits you to run SQL queries instantly inside Pandas. On this approach, we will seamlessly use the SQL syntax with out leaving the dynamic Pandas atmosphere.

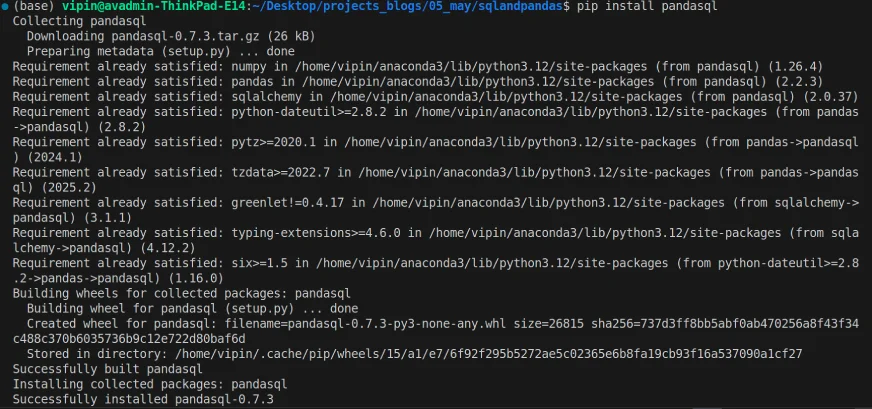

Putting in pandasql

Step one to utilizing Pandas and SQL collectively is to put in pandasql into the environment.

pip set up pandasql

As soon as the set up is full, we will import the pandasql into our code and use it to execute the SQL queries on Pandas DataFrame.

Operating SQL Queries in Pandas

As soon as the set up is over, we will import the pandasql and begin exploring it.

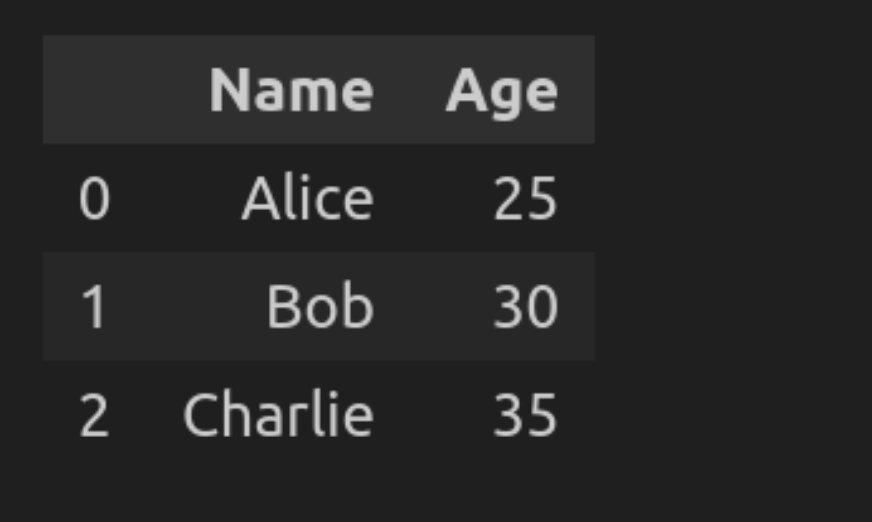

import pandas as pd

import pandasql as psql

# Create a pattern DataFrame

knowledge = {'Identify': ['Alice', 'Bob', 'Charlie'], 'Age': [25, 30, 35]}

df = pd.DataFrame(knowledge)

# SQL question to pick out all knowledge

question = "SELECT * FROM df"

outcome = psql.sqldf(question, locals())

outcome

Let’s break down the code

- pd.DataFrame will convert the pattern knowledge right into a tabular format.

- question (SELECT * FROM df) will choose every thing within the type of the DataFrame.

- psql.sqldf(question, locals()) will execute the SQL question on the DataFrame utilizing the native scope.

Information Evaluation with pandasql

As soon as all of the libraries are imported, it’s time to carry out the info evaluation utilizing pandasql. The under part reveals just a few examples of how one can improve the info evaluation by combining Pandas and SQL. To do that:

Step 1: Load the Information

# Required libraries

import pandas as pd

import pandasql as ps

import plotly.specific as px

import ipywidgets as widgets

# Load the dataset

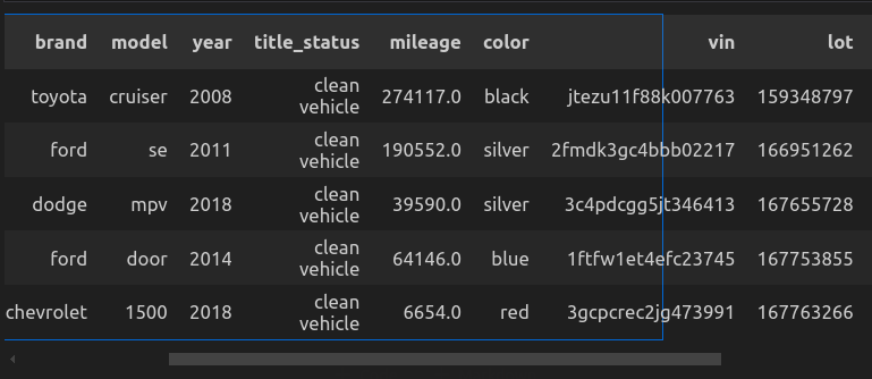

car_data = pd.read_csv("cars_datasets.csv")

car_data.head()

Let’s break down the code

- Importing the mandatory libraries: pandas for dealing with knowledge, pandasql for querying the DataFrames, plotly for making interactive plots.

- pd.read_csv(“cars_datasets.csv”) to load the info from the native listing.

- car_data.head() will show the highest 5 rows.

Step 2: Discover the Information

On this part, we’ll attempt to get accustomed to knowledge by exploring issues just like the names of columns, the info kind of the options, and whether or not the info has any null values or not.

- Examine the column names.

# Show column names

column_names = car_data.columns

column_names

"""

Output:

Index(['Unnamed: 0', 'price', 'brand', 'model', 'year', 'title_status',

'mileage', 'color', 'vin', 'lot', 'state', 'country', 'condition'],

dtype="object")

""”- Determine the info kind of the columns.

# Show dataset data

car_data.data()

"""

Ouput:

<class 'pandas.core.body.DataFrame'>

RangeIndex: 2499 entries, 0 to 2498

Information columns (complete 13 columns):

# Column Non-Null Rely Dtype

--- ------ -------------- -----

0 Unnamed: 0 2499 non-null int64

1 worth 2499 non-null int64

2 model 2499 non-null object

3 mannequin 2499 non-null object

4 yr 2499 non-null int64

5 title_status 2499 non-null object

6 mileage 2499 non-null float64

7 shade 2499 non-null object

8 vin 2499 non-null object

9 lot 2499 non-null int64

10 state 2499 non-null object

11 nation 2499 non-null object

12 situation 2499 non-null object

dtypes: float64(1), int64(4), object(8)

reminiscence utilization: 253.9+ KB

"""- Examine for Null values.

# Examine for null values

car_data.isnull().sum()

"""Output:

Unnamed: 0 0

worth 0

model 0

mannequin 0

yr 0

title_status 0

mileage 0

shade 0

vin 0

lot 0

state 0

nation 0

situation 0

dtype: int64

"""Step 3: Analyze the Information

As soon as now we have loaded the dataset into the workflow. Now we are going to start by performing knowledge evaluation.

Examples of Information Evaluation with Python Pandas and SQL

Now let’s attempt utilizing pandasql to investigate the above dataset by working a few of our queries.

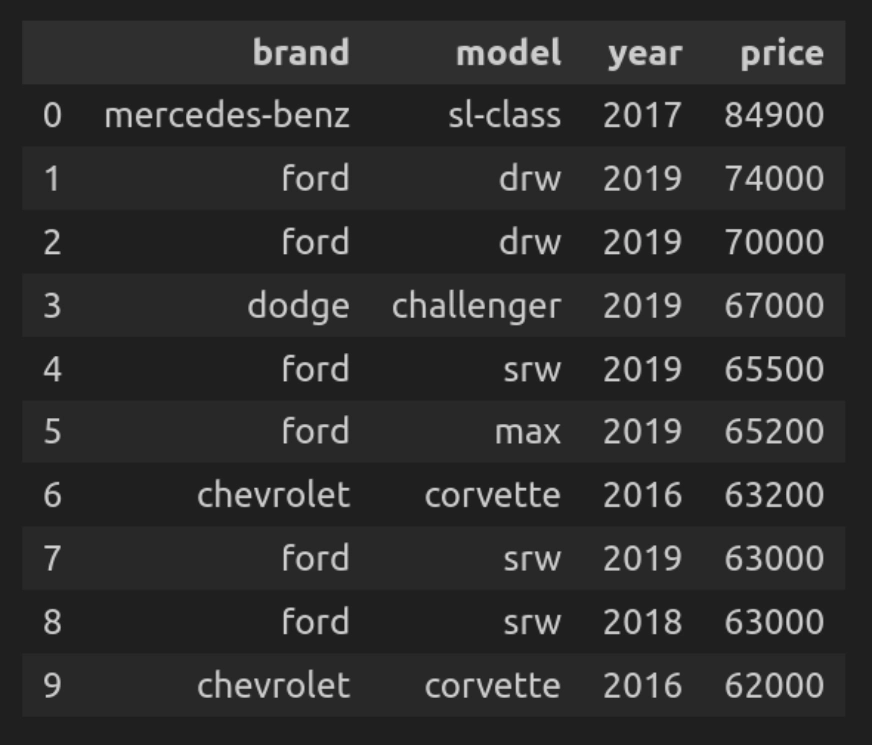

Question 1: Choosing the ten Most Costly Vehicles

Let’s first discover the highest 10 most costly automobiles from all the dataset.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT model, mannequin, yr, worth

FROM car_data

ORDER BY worth DESC

LIMIT 10

""")

Let’s break down the code

- q(question) is a customized perform that executes the SQL question on the DataFrame.

- The question iterates over the whole dataset and selects columns akin to model, mannequin, yr, worth, after which types them by worth in descending order.

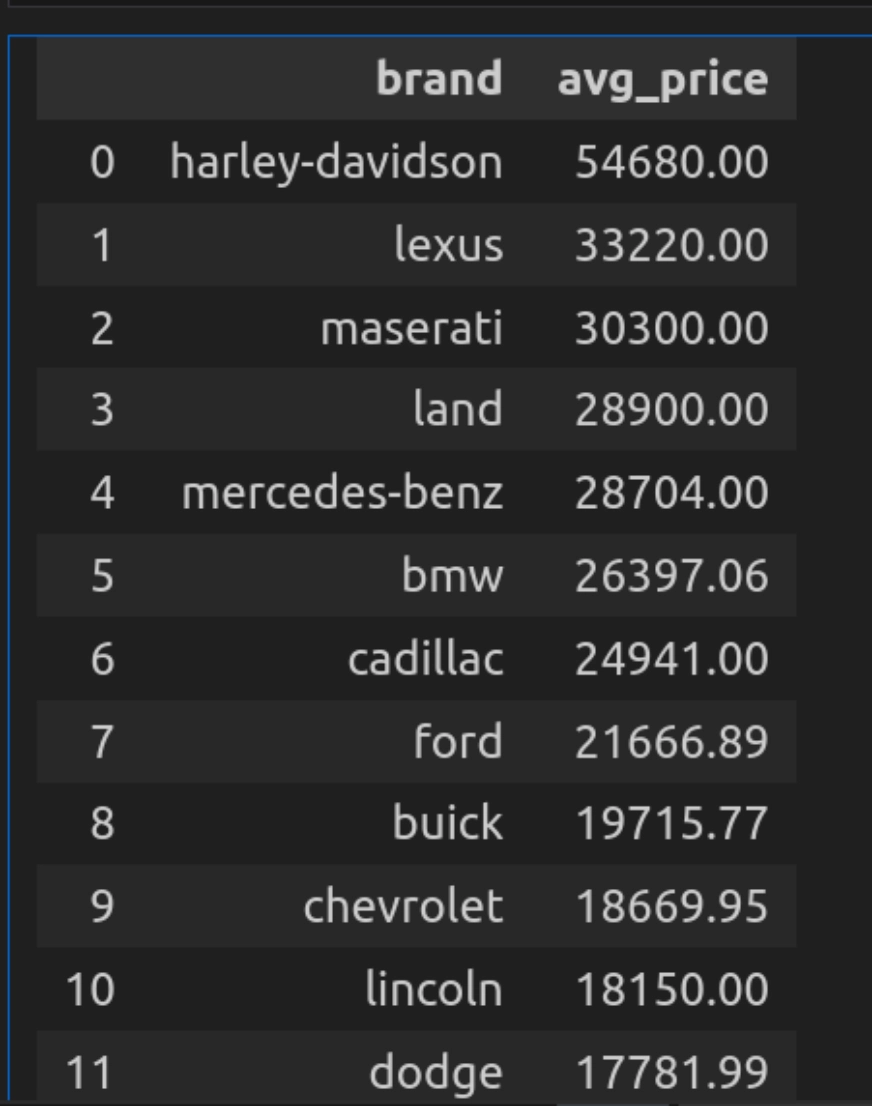

Question 2: Common Worth by Model

Right here we’ll discover the common worth of automobiles for every model.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT model, ROUND(AVG(worth), 2) AS avg_price

FROM car_data

GROUP BY model

ORDER BY avg_price DESC""")

Let’s break down the code

- Right here, the question makes use of AVG(worth) to calculate the common worth for every model and makes use of spherical, spherical off the resultant to 2 decimals.

- And GROUPBY will group the info by the automotive manufacturers and type it by utilizing the AVG(worth) in descending order.

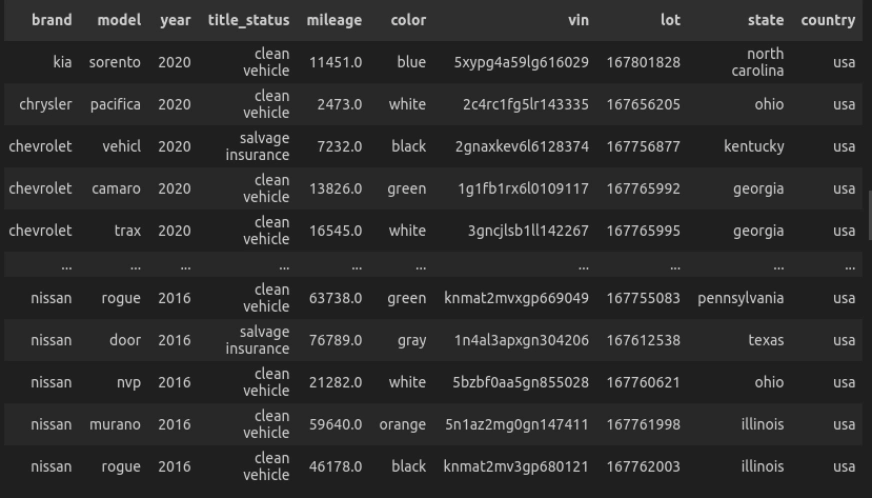

Question 3: Vehicles Manufactured After 2015

Let’s make a listing of the automobiles manufactured after 2015.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT *

FROM car_data

WHERE yr > 2015

ORDER BY yr DESC

""")

Let’s break down the code

- Right here, the question selects all of the automotive producers after 2015 and orders them in descending order.

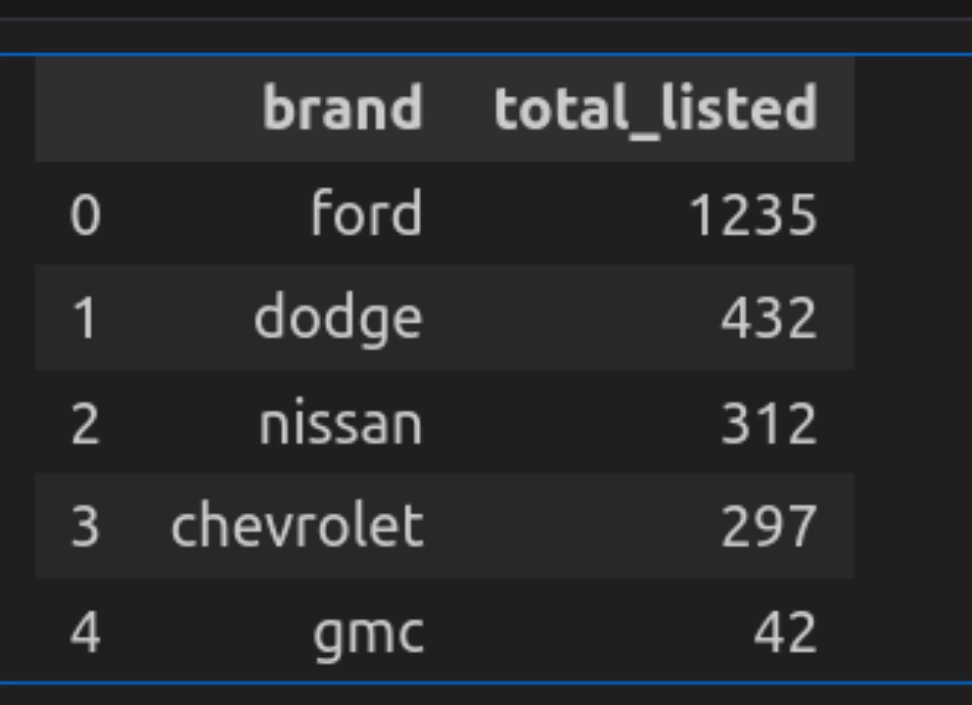

Question 4: Prime 5 Manufacturers by Variety of Vehicles Listed

Now let’s discover the full variety of automobiles produced by every model.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT model, COUNT(*) as total_listed

FROM car_data

GROUP BY model

ORDER BY total_listed DESC

LIMIT 5

""")

Let’s break down the code

- Right here the question counts the full variety of automobiles by every model utilizing the GROUP BY operation.

- It lists them in descending order and makes use of a restrict of 5 to select solely the highest 5.

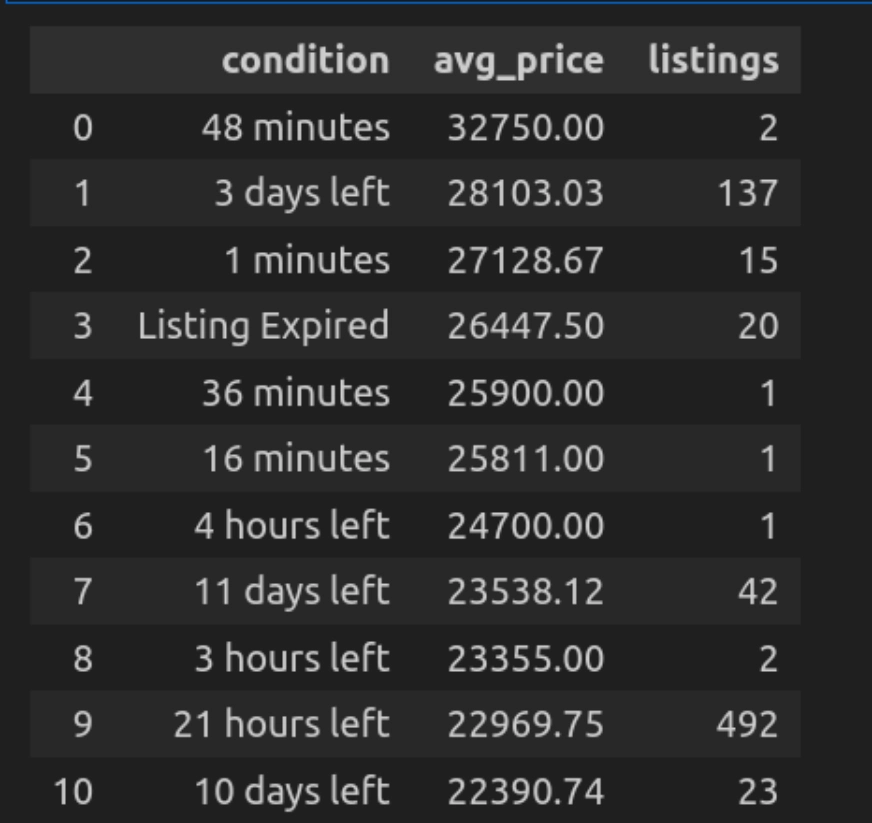

Question 5: Common Worth by Situation

Let’s see how we will group the automobiles based mostly on a situation. Right here, the situation column reveals the time when the itemizing was added or how a lot time is left. Based mostly on that, we will categorize the automobiles and get their common pricing.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT situation, ROUND(AVG(worth), 2) AS avg_price, COUNT(*) as listings

FROM car_data

GROUP BY situation

ORDER BY avg_price DESC

""")

Let’s break down the code

- Right here, the question teams the automobiles on situation (akin to new or used) and calculates the worth utilizing AVG(Worth).

- Organize them in descending order to indicate the costliest automobiles first.

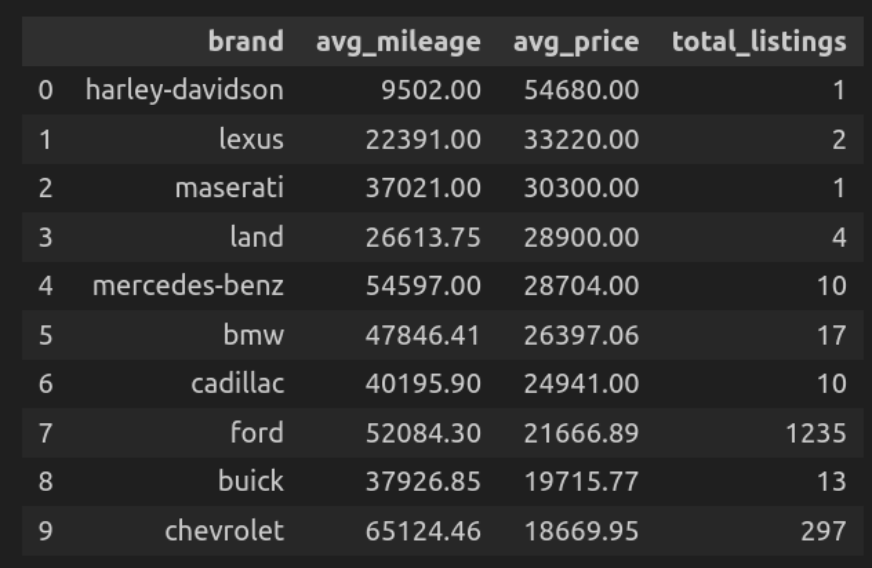

Question 6: Common Mileage and Worth by Model

Right here we’ll discover the common mileage of the automobiles for every model and their common worth.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT model,

ROUND(AVG(mileage), 2) AS avg_mileage,

ROUND(AVG(worth), 2) AS avg_price,

COUNT(*) AS total_listings

FROM car_data

GROUP BY model

ORDER BY avg_price DESC

LIMIT 10

""")

Let’s break down the code

- Right here, the question teams the automobiles utilizing model and calculates their common mileage and common worth, and counts the full variety of listings of every model in that group.

- Organize them in descending order by worth.

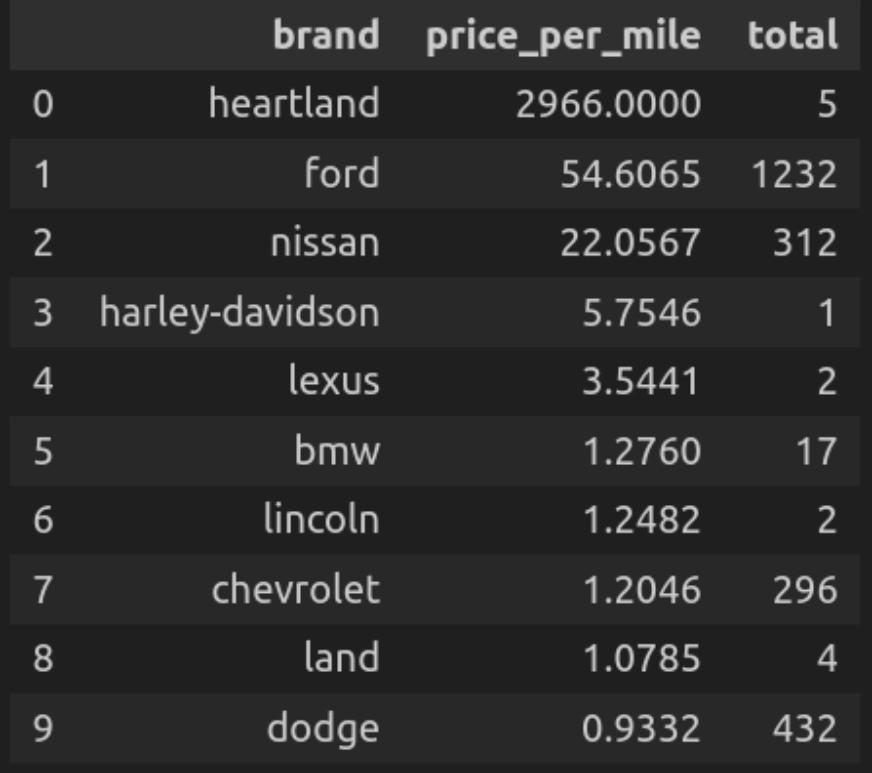

Question 7: Worth per Mileage Ratio for Prime Manufacturers

Now let’s type the highest manufacturers based mostly on their calculated mileage ratio i.e. the common worth per mile of the automobiles for every model.

def q(question):

return ps.sqldf(question, {'car_data': car_data})

q("""

SELECT model,

ROUND(AVG(worth/mileage), 4) AS price_per_mile,

COUNT(*) AS complete

FROM car_data

WHERE mileage > 0

GROUP BY model

ORDER BY price_per_mile DESC

LIMIT 10

""")

Let’s break down the code

- Right here question calculates the worth per mileage for every model after which reveals automobiles by every model with that particular worth per mileage. In descending order by worth per mile.

Question 8: Common Automobile Worth by Space

Right here we’ll discover and plot the variety of automobiles of every model in a specific metropolis.

state_dropdown = widgets.Dropdown(

choices=car_data['state'].distinctive().tolist(),

worth=car_data['state'].distinctive()[0],

description='Choose State:',

format=widgets.Format(width="50%")

)

def plot_avg_price_state(state_selected):

question = f"""

SELECT model, AVG(worth) AS avg_price

FROM car_data

WHERE state="{state_selected}"

GROUP BY model

ORDER BY avg_price DESC

"""

outcome = q(question)

fig = px.bar(outcome, x='model', y='avg_price', shade="model",

title=f"Common Automobile Worth in {state_selected}")

fig.present()

widgets.work together(plot_avg_price_state, state_selected=state_dropdown)

Let’s break down the code

- State_dropdown creates a dropdown to pick out the completely different US states from the info and permits the consumer to pick out a state.

- plot_avg_price_state(state_selected) executes the question to calculate the common worth per model and offers a bar chart utilizing plotly.

- widgets.work together() hyperlinks the dropdown with the perform so the chart can replace by itself when the consumer selects a special state.

For the pocket book and the dataset used right here, please go to this hyperlink.

Limitations of pandasql

Although pandasql presents many environment friendly functionalities and a handy technique to run SQL queries with Pandas, it additionally has some limitations. On this part, we’ll discover these limitations and take a look at to determine when to depend on conventional Pandas or SQL, and when to make use of pandasql.

- Not appropriate with giant datasets: Whereas we run the pandasql question creates a replica of the info in reminiscence earlier than full execution of the present question. This methodology of executing the over queries coping with giant datasets can result in excessive reminiscence utilization and gradual execution.

- Restricted SQL Options: pandasql helps many fundamental SQL options, but it surely fails to completely implement all of the superior options like subqueries, advanced joins, and window features.

- Compatibility with Complicated Information: pandas works properly with tabular knowledge. Whereas working with advanced knowledge, akin to nested JSON or multi-index DataFrames, it fails to offer the specified outcomes.

Conclusion

Utilizing Pandas and SQL collectively considerably improves the info evaluation workflow. By leveraging pandasql, one can seamlessly run SQL queries within the DataFrames. This helps those that are accustomed to SQL and wish to work in Python environments. This integration of Pandas and SQL combines the flexibleness of each and opens up new prospects for knowledge manipulation and evaluation. With this, one can improve the power to sort out a variety of knowledge challenges. Nonetheless, it’s necessary to contemplate the constraints of pandasql as properly, and discover different approaches when coping with giant and sophisticated datasets.

Login to proceed studying and revel in expert-curated content material.